Semantic segmentation and instance segmentation are two approaches in computer vision that let machines interpret visual data at the pixel level. Both techniques segment images into meaningful regions, but they differ in how they identify and differentiate objects within a scene. Understanding the difference is important for selecting the right approach for your computer vision project, whether you work on autonomous driving systems, medical image analysis, or retail automation.

Semantic segmentation assigns each pixel to one of a set of predefined categories, creating a comprehensive map where every pixel receives a class label. This technique treats all objects of the same class as one unified entity without distinguishing between individual instances. For example, in a street scene, all car pixels would be labeled as “vehicle” regardless of how many separate cars exist in the image. In other words, semantic segmentation classifies pixels by category without considering individual object boundaries.
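
To make the idea of a per-pixel class map concrete, here is a minimal sketch. It assumes a model has already produced one score per class for every pixel; the class names, the toy scores, and the `semantic_map` helper are all hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical class names, used only for this illustration.
CLASS_NAMES = ["road", "vehicle", "sky"]

def semantic_map(scores: np.ndarray) -> np.ndarray:
    """Turn per-class scores of shape (num_classes, H, W) into an (H, W) label map."""
    # Each pixel gets the index of its highest-scoring class.
    return scores.argmax(axis=0)

# Made-up scores for a tiny 2x3 image.
scores = np.array([
    [[0.90, 0.1, 0.1], [0.8, 0.2, 0.1]],   # road
    [[0.05, 0.8, 0.7], [0.1, 0.7, 0.1]],   # vehicle
    [[0.05, 0.1, 0.2], [0.1, 0.1, 0.8]],   # sky
])

labels = semantic_map(scores)
print(labels)
```

Every vehicle pixel receives the same label, `1`, so the map alone cannot tell you whether those pixels belong to one car or several.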

Semantic segmentation allows machines to understand the overall composition of a scene by classifying regions based on their semantic meaning. More broadly, segmentation in computer vision divides an image into multiple segments or regions, where each segment corresponds to a different object or part of the scene. This foundational task enables machines to analyze images at the pixel level, making it possible to identify object boundaries, classify regions, and understand relationships within visual data.

Segmentation provides the framework for transforming raw pixel data into structured, meaningful information that computer vision systems can process. Deep learning advances keep improving the accuracy and sophistication of these analyses, and image segmentation continues to evolve with new architectures and methodologies. High-quality training data is a fundamental requirement for successful segmentation models, which is why many organizations rely on professional data labeling services to ensure consistency, accuracy, and scalability in their projects.

What Is Instance Segmentation?

Instance segmentation identifies each individual object in an image and produces a separate pixel-level mask for it, so two objects of the same class receive different labels. Many models combine object detection and semantic segmentation methodologies, first identifying object proposals through region proposal networks, then generating a pixel-level mask for each detected instance. This enables precise tracking, counting, and analysis of individual objects, making instance segmentation invaluable for complex computer vision tasks.

Instance segmentation typically requires more computational resources than semantic approaches, but the trade-off provides critical capabilities for applications where distinguishing between individual objects matters. It commonly takes a two-stage approach that first detects objects and then segments them individually. Instance segmentation is applied in scenarios ranging from medical imaging to robotics, where precise boundary delineation for each instance is critical. Organizations that need instance segmentation must weigh both the computational cost and the specific requirements of their application.
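
As a rough illustration of what instance-level output makes possible, the sketch below assumes the model returns one boolean mask and one class name per detected object. The array layout and the variable names are assumptions for this example, not the output format of any particular library.

```python
from collections import Counter

import numpy as np

# Hypothetical instance-segmentation output for a 4x6 image:
# one boolean mask per detected object, plus its class name.
masks = np.zeros((2, 4, 6), dtype=bool)
masks[0, 1:3, 0:2] = True   # first car, 2x2 pixels
masks[1, 1:3, 3:6] = True   # second car, 2x3 pixels
class_names = ["vehicle", "vehicle"]

# Counting objects is just counting masks per class.
counts = Counter(class_names)

# Per-object measurements come from each mask separately.
areas = masks.reshape(len(masks), -1).sum(axis=1)

print(counts["vehicle"])
print(areas.tolist())
```

Because each object keeps its own mask, counting and per-object measurement need no extra post-processing.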

Semantic Segmentation vs Instance Segmentation: Core Differences

Understanding the difference between semantic segmentation and instance segmentation comes down to how each approach handles object identification and classification. The two are distinguished by whether individual object instances need to be identified or whether categorical classification suffices for the application. Choosing between them is a fundamental decision in computer vision architecture, because each has different strengths for different applications. The table below highlights the key differences:

| Aspect | Semantic Segmentation | Instance Segmentation |
|---|---|---|
| Definition | Classifies each pixel into a category without distinguishing individual objects | Distinguishes between different object instances using a unique mask for each |
| Granularity | Treats all objects of the same class as one region | Assigns and differentiates each individual object |
| Object Counting | Cannot count individual objects | Enables precise and accurate counting of each object instance |
| Overlapping Objects | Merges overlapping objects of the same class into one region | Separates overlapping objects with distinct boundaries |
| Output | Single segmentation map with class labels | Multiple masks with instance IDs, bounding boxes, and class labels |
| Computational Cost | Lower (faster processing, less memory usage) | Higher (more complex and slower processing) |
| Model Complexity | Simpler encoder–decoder architectures | More complex multi-stage detection models |
| Training Data | Requires pixel-level class annotations | Requires instance-level masks with unique IDs |
| Real-time Performance | Better suited for real-time applications | Challenging for real-time use without optimization |
| Best Use Cases | Scene understanding, background classification | Object tracking, counting, and individual object analysis |

Both segmentation tasks require careful consideration of your project requirements. Different segmentation methods offer unique advantages depending on whether you need instance-level or class-level understanding. Modern vision models can implement either approach depending on the specific application needs. Understanding types of segmentation helps in selecting the most appropriate technique for your use case. Panoptic segmentation combines both approaches to provide comprehensive scene understanding.
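
The relationship between the two outputs can be sketched in a few lines: instance masks can always be collapsed into a semantic map, but not the other way around. The function name `instances_to_semantic` and the class IDs below are hypothetical.

```python
import numpy as np

def instances_to_semantic(instance_masks, class_ids, background_id=0):
    """Collapse (N, H, W) boolean instance masks into one (H, W) class map."""
    height, width = instance_masks.shape[1:]
    semantic = np.full((height, width), background_id, dtype=np.int64)
    for mask, class_id in zip(instance_masks, class_ids):
        # Every pixel of the instance is overwritten with its class ID,
        # which discards the information about which instance it was.
        semantic[mask] = class_id
    return semantic

# Two adjacent cars (class ID 1, an assumed value) in a 2x5 image.
instance_masks = np.zeros((2, 2, 5), dtype=bool)
instance_masks[0, :, 0:2] = True
instance_masks[1, :, 2:4] = True

semantic = instances_to_semantic(instance_masks, class_ids=[1, 1])
print(semantic)
```

The two cars end up as one contiguous block of `1`s, which is exactly the "Granularity" and "Object Counting" rows of the table in miniature.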

How Does Semantic Segmentation Work?

Semantic segmentation uses deep learning models, especially Convolutional Neural Networks (CNNs), typically arranged as encoder-decoder architectures. The encoder extracts features from the input image through multiple convolution and pooling operations, while the decoder reconstructs the segmentation map by upsampling these features back to the original image resolution. Training these models requires precise annotations, which is where common image annotation techniques become essential for creating high-quality datasets for both semantic and instance segmentation tasks. Semantic segmentation proves highly effective for scene understanding tasks where instance distinction is not required.
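
The downsample-then-upsample shape of an encoder-decoder can be illustrated without any learned weights. The sketch below uses 2x2 max pooling as a stand-in for the encoder and nearest-neighbour repetition as a stand-in for the decoder; real networks use learned convolutions at both stages, and the helper names here are hypothetical.

```python
import numpy as np

def max_pool_2x2(x):
    """Halve height and width by taking the max of each 2x2 block.

    Assumes both dimensions are even.
    """
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def upsample_nearest_2x(x):
    """Double height and width by repeating each value."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

image = np.arange(16, dtype=float).reshape(4, 4)
encoded = max_pool_2x2(image)            # shape (2, 2)
decoded = upsample_nearest_2x(encoded)   # back to shape (4, 4)

print(encoded)
print(decoded)
```

The decoded array has the original resolution but has lost the fine detail inside each 2x2 block, which is the problem that skip connections and pooling indices are designed to reduce.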

Popular semantic segmentation architectures include:

- Fully Convolutional Networks (FCN): One of the first architectures without fully connected layers, FCN can process images of any size by replacing traditional fully connected layers with 1×1 convolutional layers. It uses skip connections to combine information from different network layers, allowing the model to make predictions that respect both local details and global structure.

- U-Net: It was originally developed for biomedical image segmentation. U-Net features a U-shaped architecture with an encoder path that captures context and a decoder path that enables precise localization. Skip connections between corresponding encoder and decoder layers help in preserving spatial information lost during downsampling, making the U-Net highly effective even with limited training data.

- DeepLab: This architecture uses atrous convolution (also called dilated convolution) to capture multi-scale contextual information without reducing spatial resolution. DeepLab’s use of Atrous Spatial Pyramid Pooling (ASPP) allows it to effectively segment objects at various scales.

- SegNet: It features an encoder-decoder structure specifically designed for scene understanding. It uses pooling indices from the encoder to perform upsampling in the decoder, providing efficient inference while maintaining segmentation quality. Each semantic model brings unique architectural innovations to address specific challenges in pixel-level classification.
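
The pooling-indices idea mentioned for SegNet can be sketched on a single 2-D array. This is a simplified NumPy illustration, not SegNet's actual implementation: the encoder remembers where each maximum came from, and the decoder puts the value back in that position. The function names are hypothetical.

```python
import numpy as np

def max_pool_with_indices(x):
    """2x2 max pooling that also records the position (0-3) of each maximum."""
    h, w = x.shape
    # Group the array into 2x2 blocks, flattened to length-4 vectors.
    blocks = x.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3).reshape(h // 2, w // 2, 4)
    return blocks.max(axis=2), blocks.argmax(axis=2)

def max_unpool(pooled, indices):
    """Place each pooled value back at its recorded position; other cells are zero."""
    ph, pw = pooled.shape
    blocks = np.zeros((ph, pw, 4), dtype=pooled.dtype)
    rows, cols = np.indices((ph, pw))
    blocks[rows, cols, indices] = pooled
    return blocks.reshape(ph, pw, 2, 2).transpose(0, 2, 1, 3).reshape(ph * 2, pw * 2)

x = np.array([
    [1, 3, 2, 0],
    [0, 2, 5, 1],
    [7, 0, 0, 0],
    [1, 1, 0, 9],
])
pooled, indices = max_pool_with_indices(x)
restored = max_unpool(pooled, indices)
print(pooled)
print(restored)
```

Compared with plain nearest-neighbour upsampling, the restored map keeps each strong activation at its original location, which helps preserve boundaries.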

Applications of Semantic Segmentation

Semantic segmentation is ideal in scenarios where understanding the overall composition of a scene matters more than identifying individual objects:

Autonomous Vehicles

Figure: Semantic segmentation of an urban street scene showing roads, sidewalks, vehicles, and traffic signs.

Semantic segmentation helps self-driving cars understand road scenes by classifying pixels into classes such as road, sidewalk, vehicle, pedestrian, traffic sign, and sky. This gives the vehicle an understanding of its environment for safe navigation.

Medical Imaging

In healthcare, semantic segmentation assists doctors in identifying and classifying different tissue types, organs, or pathologies in medical scans such as MRI, CT, or X-ray images. It enables precise analysis for diagnosis and treatment planning without needing to differentiate between individual cells or lesions of the same type.

Satellite Imagery Analysis

For land cover classification, urban planning, and environmental monitoring, semantic segmentation categorizes terrain into classes like vegetation, water bodies, buildings, and roads, providing valuable insights for geographic information systems.

Agriculture

Precision farming applications use semantic segmentation to analyze crop health, differentiate between crops and weeds, and monitor field conditions for optimized resource management.

How Does Instance Segmentation Work?

Instance segmentation involves a multi-step process that combines object detection and pixel-level classification:

- Object Detection: The model first identifies bounding boxes around each object instance within the image, determining where objects reside.

- Pixel Classification: Similar to semantic segmentation, the model then classifies pixels within each detected region, but maintains the distinction between individual object instances.

- Mask Generation: The model produces pixel-level masks that precisely outline the boundaries of each detected object instance. Each pixel within a mask carries both class and instance information, enabling comprehensive object analysis. Every object receives a unique identifier, even when several objects belong to the same class. Knowing which instance each pixel belongs to helps in tracking and analyzing individual objects throughout a scene.
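
The three steps above can be sketched as a final assembly stage: given bounding boxes and a binary mask predicted inside each box, build one image-sized map of instance IDs. The box format `(top, left, bottom, right)`, the rule that earlier detections win overlapping pixels, and the name `paste_instance_masks` are all assumptions made for this illustration.

```python
import numpy as np

def paste_instance_masks(image_shape, boxes, box_masks):
    """Build an (H, W) instance-ID map from per-box binary masks.

    boxes: (top, left, bottom, right) tuples, bottom/right exclusive.
    box_masks: boolean arrays whose shape matches each box.
    ID 0 is background; instances are numbered from 1 in input order.
    """
    instance_map = np.zeros(image_shape, dtype=np.int32)
    pairs = zip(boxes, box_masks)
    for instance_id, ((top, left, bottom, right), mask) in enumerate(pairs, start=1):
        region = instance_map[top:bottom, left:right]  # view into instance_map
        # Claim only pixels inside the mask that no earlier instance has taken.
        region[mask & (region == 0)] = instance_id
    return instance_map

boxes = [(0, 0, 2, 3), (1, 2, 4, 5)]
box_masks = [np.ones((2, 3), dtype=bool), np.ones((3, 3), dtype=bool)]
instance_map = paste_instance_masks((4, 6), boxes, box_masks)
print(instance_map)
```

The two boxes overlap at one pixel, yet each object keeps a distinct ID, which is what lets downstream code track or count them separately.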

Applications of Instance Segmentation

Instance segmentation models focus exclusively on detecting and generating segmentation masks for individual things. Instance segmentation works well in scenarios requiring precise identification and analysis of individual objects:

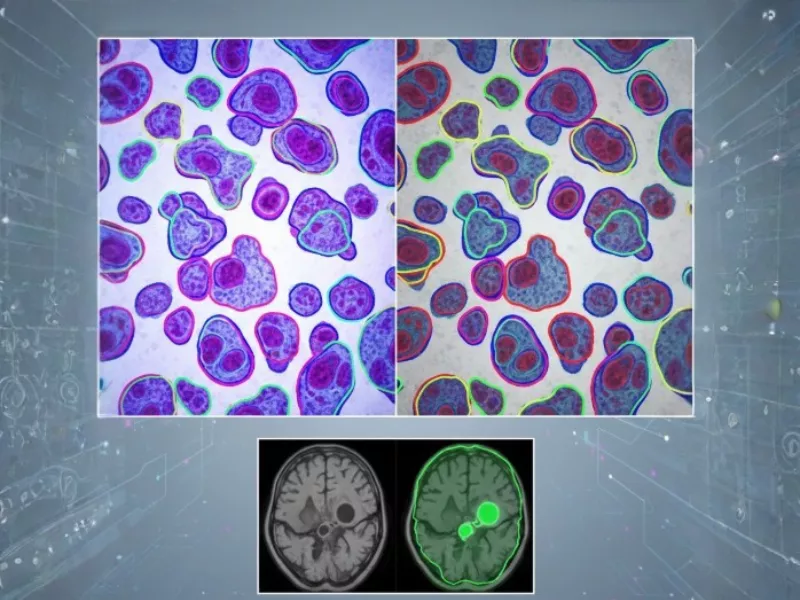

Medical Imaging

In healthcare, instance segmentation helps in the precise identification of individual cells, tumors, or organs, enabling accurate counting, measurement, and analysis, which is crucial for diagnosis and personalized treatment planning.

Robotics

Robots use instance segmentation to recognize and manipulate individual objects in cluttered environments, enabling tasks like picking and sorting in warehouse automation or manufacturing quality control.

Retail Analytics

Instance segmentation is applied to detect shelves and to distinguish individual customers and products, supporting analysis of shopping behavior, inventory monitoring, and store layout optimization.

Surveillance and Security

Security systems employ instance segmentation to detect, track, and identify individuals or objects of interest across video feeds.

Agriculture

Advanced farming systems use instance segmentation to count individual plants or fruits, monitor individual crop health, and automate harvesting processes.

Real-Life Scenario Comparisons

To better understand when to use each technique, let’s look at semantic segmentation vs instance segmentation examples across different industries:

Scenario 1: Autonomous Driving – Highway Traffic

Figure: Highway traffic with bounding boxes vs instance segmentation on vehicles.

Situation: A self-driving car navigates a busy highway with multiple vehicles, lane markings, and road signs.

Semantic Segmentation Approach: The system classifies all pixels into categories like “road,” “lane marking,” “vehicle,” “sky,” and “vegetation.” All cars on the highway receive the simple label “vehicle” without distinction between them. This provides the car with basic environmental understanding—where the road exists, where it’s safe to drive, and what areas contain vehicles.

Instance Segmentation Approach: The system not only identifies “vehicle” pixels but also distinguishes each car individually as “vehicle_1,” “vehicle_2,” “vehicle_3,” etc. This enables the autonomous system to track each vehicle’s trajectory independently, predict individual movements, calculate safe following distances, and make lane-change decisions based on specific vehicle behaviors.

Winner for this scenario: Instance segmentation wins here because tracking individual vehicles is crucial for safety and decision-making.

Scenario 2: Medical Imaging – Tumor Detection

Situation: Doctors analyze a CT scan to identify and measure tumors in a patient’s organ.

Semantic Segmentation Approach: The system labels all tumor tissue pixels as “tumor” and healthy tissue as “normal tissue.” While this helps visualize the overall extent of cancerous tissue, it cannot distinguish between separate tumor masses, making it impossible to count tumors or track individual growth patterns.

Instance Segmentation Approach: The system identifies each tumor individually, labeling them as “tumor_1,” “tumor_2,” “tumor_3,” etc. This allows doctors to count the exact number of tumors, measure each one’s size separately, track individual tumor growth over time, and plan targeted treatments for specific lesions.

Winner for this scenario: Instance segmentation wins because accurate diagnosis, treatment planning, and monitoring of individual tumor response to therapy all depend on separating each tumor.
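
As a hedged illustration of the per-tumor measurement described in this scenario, the sketch below converts an instance-ID map into a physical area for each instance. The pixel spacing value, the toy map, and the function name `instance_areas_mm2` are assumptions for the example; in practice the spacing comes from the scan metadata.

```python
import numpy as np

def instance_areas_mm2(instance_map, pixel_spacing_mm):
    """Return {instance_id: area in mm^2} for every non-background instance."""
    row_mm, col_mm = pixel_spacing_mm
    # Count pixels per instance ID, ignoring background (ID 0).
    ids, counts = np.unique(instance_map[instance_map > 0], return_counts=True)
    return {int(i): float(c) * row_mm * col_mm for i, c in zip(ids, counts)}

# Toy slice with two separate lesions labeled 1 and 2.
instance_map = np.array([
    [1, 1, 0, 0, 0],
    [1, 1, 0, 2, 2],
    [0, 0, 0, 2, 2],
    [0, 0, 0, 2, 2],
])
areas = instance_areas_mm2(instance_map, pixel_spacing_mm=(0.5, 0.5))
print(areas)
```

Running the same measurement on scans taken at different dates would give a per-lesion growth curve, provided the instance IDs are matched between scans.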

Scenario 3: Agriculture – Crop Field Analysis

Situation: Farmers monitor a large agricultural field using drone imagery to assess crop health and plan interventions.

Semantic Segmentation Approach: Semantic segmentation assigns pixels into “healthy crop,” “diseased crop,” “soil,” and “weeds.” This provides farmers with an overview of field conditions, showing which areas have healthy crops versus problem zones. Farmers can see percentage coverage and general distribution patterns without identifying individual plants.

Instance Segmentation Approach: The system identifies each plant, labeling them separately. This enables precise plant counting, individual growth tracking, per-plant health assessment, and targeted treatment of specific diseased plants rather than entire zones.

Winner for this scenario: Semantic segmentation often proves sufficient and more cost-effective for large-scale field monitoring, unless the application requires individual plant counting (like fruit counting for yield estimation), where instance segmentation becomes necessary.
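
The percentage-coverage overview described for the semantic approach is a simple pixel count. The sketch below assumes a semantic map whose integer labels index into a hypothetical `CLASS_NAMES` list; the labels and the toy field are made up.

```python
import numpy as np

# Hypothetical label order for this illustration.
CLASS_NAMES = ["soil", "healthy crop", "diseased crop", "weeds"]

def class_coverage(semantic_map, num_classes):
    """Return the fraction of pixels assigned to each class."""
    counts = np.bincount(semantic_map.ravel(), minlength=num_classes)
    return counts / semantic_map.size

field = np.array([
    [1, 1, 1, 0, 0],
    [1, 2, 3, 0, 0],
])
coverage = class_coverage(field, num_classes=len(CLASS_NAMES))
for name, fraction in zip(CLASS_NAMES, coverage):
    print(f"{name}: {fraction:.0%}")
```

This gives zone-level statistics cheaply, but it cannot answer "how many diseased plants are there", which is where instance segmentation becomes necessary.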

Deep Learning Models: Semantic Segmentation vs Instance Segmentation

Advances in deep learning have reshaped both semantic and instance segmentation approaches:

Semantic Segmentation Models

Modern semantic segmentation architectures use CNNs with encoder-decoder structures. The encoder progressively reduces spatial dimensions while extracting hierarchical features, and the decoder reconstructs the segmentation map through upsampling operations.

Key techniques in semantic segmentation include:

- Skip Connections: Directly connect encoder layers to decoder layers, preserving fine-grained details lost during downsampling

- Atrous Convolution: Expands the receptive field without reducing spatial resolution or increasing parameters

- Multi-scale Processing: Captures contextual information at various scales for robust segmentation
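
The atrous convolution item in the list above is easiest to see in one dimension. The sketch below is a plain NumPy illustration with a hypothetical `dilated_conv1d` helper: the same three weights cover a wider span of the input when the dilation rate is increased.

```python
import numpy as np

def dilated_conv1d(signal, kernel, dilation=1):
    """Valid 1-D cross-correlation with `dilation - 1` skipped samples between taps."""
    span = (len(kernel) - 1) * dilation + 1   # receptive field of one output value
    out_len = len(signal) - span + 1
    return np.array([
        sum(kernel[k] * signal[i + k * dilation] for k in range(len(kernel)))
        for i in range(out_len)
    ])

signal = np.arange(7)   # [0, 1, 2, 3, 4, 5, 6]
kernel = [1, 1, 1]      # three weights in both cases

dense = dilated_conv1d(signal, kernel, dilation=1)    # each output sees 3 samples
atrous = dilated_conv1d(signal, kernel, dilation=2)   # each output sees a span of 5

print(dense.tolist())
print(atrous.tolist())
```

In this unpadded sketch the output gets shorter as the span grows. Segmentation networks typically add padding so that the output keeps the input resolution.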

Instance Segmentation Models

Many instance segmentation models, such as Mask R-CNN, follow a two-stage approach borrowed from object detection frameworks, with an additional mask prediction branch:

Stage 1: Region proposal generation identifies potential object locations

Stage 2: Classification, bounding box refinement, and mask prediction for each proposed region

Advanced instance segmentation architectures combine transformer-based components with CNNs, relying on self-attention mechanisms to capture long-range dependencies and global context more effectively.

Future Trends in Semantic vs Instance Segmentation

Image segmentation continues to advance, driven by breakthroughs in deep learning architectures, computational efficiency, and cross-domain applications. As organizations increasingly adopt AI-powered vision systems, understanding emerging trends helps in making strategic technology investments that remain relevant for years to come.

Unified Segmentation Models

The boundary between instance segmentation and semantic segmentation is becoming increasingly blurred. Panoptic segmentation has emerged as a comprehensive solution that combines both approaches into a single unified framework. This technique labels every pixel in an image, treating “stuff” classes (like sky, road, grass) with semantic segmentation while handling “things” classes (cars, people, animals) with instance segmentation.
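
One way to picture a panoptic map is as a single integer per pixel that encodes both class and instance. The sketch below assumes the encoding `class_id * 1000 + instance_id`, with instance ID 0 for "stuff" pixels. This encoding, the class IDs, and the name `merge_panoptic` are assumptions for illustration; actual datasets and models define their own formats.

```python
import numpy as np

def merge_panoptic(semantic_map, instance_map, thing_class_ids, label_divisor=1000):
    """Combine a class map and an instance-ID map into one panoptic map."""
    is_thing = np.isin(semantic_map, sorted(thing_class_ids))
    # Stuff pixels ignore any instance ID; thing pixels keep theirs.
    instance_part = np.where(is_thing, instance_map, 0)
    return semantic_map * label_divisor + instance_part

# Assumed class IDs: 0 = sky (stuff), 1 = road (stuff), 2 = car (thing).
semantic_map = np.array([
    [0, 0, 0, 0],
    [2, 2, 0, 2],
    [1, 1, 1, 1],
])
instance_map = np.array([
    [0, 0, 0, 0],
    [1, 1, 0, 2],
    [0, 0, 0, 0],
])
panoptic = merge_panoptic(semantic_map, instance_map, thing_class_ids={2})
print(panoptic)
```

Class and instance can be recovered with `panoptic // 1000` and `panoptic % 1000`, so a single array serves both scene-level and object-level queries.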

Research teams at leading AI labs are developing more efficient panoptic architectures that reduce computational overhead while maintaining high accuracy. These models promise to simplify deployment pipelines by eliminating the need to maintain separate semantic and instance segmentation systems. Organizations working on autonomous systems, smart city infrastructure, or comprehensive scene understanding applications will benefit significantly from this convergence.

Transformer-Based Architectures Reshaping Segmentation

Vision transformers have changed how segmentation models process visual information. Unlike traditional convolutional neural networks that process images through local receptive fields, transformers use self-attention mechanisms to capture global context and long-range dependencies within images. Models like Segmenter, SegFormer, and Mask2Former demonstrate strong performance on complex segmentation tasks, although they typically rely on large-scale pre-training rather than needing less data than their CNN counterparts.

The shift toward transformer architectures enables better handling of objects at varying scales, improved performance on occluded objects, and more robust generalization across different domains. As these architectures mature and become more computationally efficient, they will likely become the default choice for production segmentation systems across industries.

Real-Time Segmentation on Edge Devices

The demand for on-device processing continues to grow, driven by privacy concerns, latency requirements, and connectivity limitations. Recent innovations in model compression, quantization, and neural architecture search have made it possible to deploy sophisticated segmentation models on mobile devices, IoT sensors, and edge computing platforms.

Lightweight architectures specifically designed for mobile deployment—such as MobileNetV3-based segmentation models and efficient transformer variants—can now perform real-time segmentation on smartphones without compromising accuracy significantly. This democratization of advanced computer vision enables new applications in augmented reality, mobile health diagnostics, and consumer-facing AI products.
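
To give a feel for what quantization does to a model's weights, here is a minimal sketch of symmetric per-tensor INT8 quantization in NumPy. It is a simplified illustration under stated assumptions (one scale per tensor, no calibration data, no activation quantization), not the procedure used by any specific deployment toolkit, and the function names are hypothetical.

```python
import numpy as np

def quantize_int8(weights):
    """Map float weights to int8 using one shared scale (symmetric quantization)."""
    max_abs = float(np.abs(weights).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0   # avoid dividing by zero
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Approximate the original float weights from their int8 codes."""
    return q.astype(np.float32) * scale

weights = np.array([-1.0, -0.4, 0.0, 0.3, 0.9], dtype=np.float32)
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(q.tolist())
print(weights.nbytes, "bytes ->", q.nbytes, "bytes")
print(float(np.abs(restored - weights).max()))
```

Storage drops by a factor of four, and the rounding error per weight is bounded by half the scale. Whether inference also gets faster depends on the hardware and runtime having efficient INT8 kernels.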

Self-Supervised and Few-Shot Learning

Traditional segmentation models require massive amounts of pixel-level annotated data, which remains expensive and time-consuming to produce. Self-supervised learning approaches are addressing this challenge by enabling models to learn robust representations from unlabeled data. These pre-trained models can then be fine-tuned on smaller labeled datasets, dramatically reducing annotation requirements.

Few-shot segmentation takes this further by enabling models to segment new object classes after seeing only a handful of examples. This capability is particularly valuable for specialized applications where collecting large annotated datasets proves impractical—such as rare medical conditions, industrial defect detection, or biodiversity monitoring in ecology.

Video Segmentation and Temporal Consistency

As applications move from static images to video streams, maintaining temporal consistency across frames becomes critical. Modern video segmentation models leverage temporal information to improve accuracy and reduce computational costs by propagating segmentation masks across frames rather than processing each frame independently.

Applications in video surveillance, sports analytics, autonomous navigation, and content creation increasingly require this temporal awareness. Advanced video segmentation systems can track object instances across frames, handle occlusions gracefully, and maintain consistent boundaries even as objects move or change appearance.
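
Tracking instances across frames can be sketched with a simple overlap rule: a mask in the current frame inherits the ID of the previous-frame mask it overlaps most, if the overlap is high enough. The greedy matching, the 0.5 threshold, and the helper names below are assumptions for illustration; production trackers use more robust association and motion models.

```python
import numpy as np

def mask_iou(a, b):
    """Intersection over union of two boolean masks."""
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0

def match_instances(prev_tracks, curr_masks, iou_threshold=0.5):
    """Assign a track ID to each current mask, reusing previous IDs when IoU is high."""
    assigned, used = [], set()
    next_id = max(prev_tracks, default=0) + 1
    for mask in curr_masks:
        candidates = [(mask_iou(mask, prev), tid)
                      for tid, prev in prev_tracks.items() if tid not in used]
        best_iou, best_id = max(candidates, default=(0.0, None))
        if best_id is not None and best_iou >= iou_threshold:
            used.add(best_id)
            assigned.append(best_id)
        else:
            assigned.append(next_id)   # unmatched mask starts a new track
            next_id += 1
    return assigned

def box_mask(shape, top, left, bottom, right):
    """Helper: boolean mask that is True inside a rectangle."""
    mask = np.zeros(shape, dtype=bool)
    mask[top:bottom, left:right] = True
    return mask

shape = (4, 8)
prev_tracks = {1: box_mask(shape, 0, 0, 2, 4), 2: box_mask(shape, 2, 4, 4, 8)}
curr_masks = [
    box_mask(shape, 0, 1, 2, 5),   # track 1 moved one pixel right
    box_mask(shape, 2, 4, 4, 8),   # track 2 did not move
    box_mask(shape, 0, 6, 2, 8),   # a new object entered the frame
]
track_ids = match_instances(prev_tracks, curr_masks)
print(track_ids)
```

This kind of association is only possible because instance segmentation provides a separate mask per object in every frame.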

3D and Multi-Modal Segmentation

The integration of multiple data modalities is opening new frontiers in segmentation technology. Systems that combine RGB images with depth sensors, LiDAR point clouds, thermal imaging, or radar data achieve more robust and accurate segmentation than single-modality approaches.

In autonomous vehicles, fusing camera images with LiDAR data provides precise 3D understanding of the environment. Medical imaging benefits from combining different scanning modalities (CT, MRI, PET) to improve diagnostic accuracy. These multi-modal approaches represent the future of robust computer vision systems that can operate reliably in challenging real-world conditions.

Automated Annotation and Active Learning

The annotation bottleneck continues to constrain the development of segmentation systems. AI-assisted annotation tools that leverage pre-trained models to suggest initial segmentations are significantly reducing human annotation time and costs. Active learning frameworks identify the most informative samples for annotation, ensuring that limited annotation budgets deliver maximum model improvement.

Interactive segmentation tools that allow annotators to refine masks through simple clicks or scribbles are making the annotation process more efficient and accessible. These technologies are crucial for organizations scaling their computer vision capabilities without proportionally scaling annotation teams.
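
One common active learning heuristic is uncertainty sampling: send the images the current model is least sure about to annotators first. The sketch below scores each image by its mean per-pixel entropy. The choice of entropy as the score and the helper names are assumptions for illustration; the source does not prescribe a specific selection rule.

```python
import numpy as np

def mean_pixel_entropy(probs):
    """Mean per-pixel entropy (in nats) of a (num_classes, H, W) probability map."""
    eps = 1e-12   # guards against log(0)
    entropy = -(probs * np.log(probs + eps)).sum(axis=0)
    return float(entropy.mean())

def select_for_annotation(prob_maps, budget):
    """Return the indices of the `budget` most uncertain images."""
    scores = [mean_pixel_entropy(p) for p in prob_maps]
    ranked = sorted(range(len(prob_maps)), key=lambda i: scores[i], reverse=True)
    return ranked[:budget]

def two_class_probs(p, shape=(2, 2)):
    """Helper: a probability map where every pixel predicts class 0 with probability p."""
    return np.stack([np.full(shape, p), np.full(shape, 1.0 - p)])

prob_maps = [
    two_class_probs(0.99),   # confident prediction
    two_class_probs(0.50),   # maximally uncertain
    two_class_probs(0.80),   # somewhat uncertain
]
selected = select_for_annotation(prob_maps, budget=2)
print(selected)
```

With a budget of two, the confident image is skipped and the annotation effort goes to the two images where labels are likely to teach the model the most.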

Explainable and Interpretable Segmentation

As segmentation models are deployed in critical applications like medical diagnosis and autonomous driving, understanding model decisions becomes increasingly important. Research into explainable AI for segmentation focuses on visualization techniques, attention mechanisms, and uncertainty quantification that help users understand why models make specific segmentation decisions.

These interpretability tools enable domain experts to validate model behavior, identify failure modes, and build trust in AI systems. Regulatory frameworks in healthcare and automotive sectors are increasingly requiring such explainability capabilities for AI deployment.

How to Choose between Semantic and Instance Segmentation?

Selecting the appropriate segmentation technique requires careful consideration of your specific requirements:

Choose Semantic Segmentation When:

- You need to understand overall scene composition

- Individual object distinction is not necessary

- Computational efficiency is a priority

- You work with limited labeled data

- You analyze uncountable elements like sky, road, or vegetation

- Your focus is on material classification or background separation

Choose Instance Segmentation When:

- Counting individual objects is essential

- Tracking objects across frames is required

- Analyzing individual object properties matters

- Handling overlapping or occluded objects is necessary

- Precise boundary delineation for each instance is critical

- Applications involve object manipulation or individual monitoring

Consider Hybrid Approaches (Panoptic Segmentation) When:

- Both scene understanding and instance detection are needed

- Complete visual scene interpretation is required

- You work on autonomous systems requiring environmental awareness

Conclusion

The choice between semantic segmentation and instance segmentation determines how your computer vision model analyzes visual data. Semantic segmentation provides efficient pixel-level classification, ideal for analyzing scene composition. Instance segmentation adds instance-level detail that supports object counting, tracking, and analysis. Pick the approach that matches your requirements, and consider panoptic segmentation when you need both.

Frequently Asked Questions

Can semantic segmentation separate individual objects?

No, semantic segmentation cannot separate individual objects of the same class. This limitation stems from its design, which assigns the same class label to all pixels belonging to a particular category without differentiating between separate instances.
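
A natural workaround is to run connected-component labeling on the semantic mask, but the sketch below shows why that only partly helps. It uses a small pure-Python flood fill with 4-connectivity; the function name and the toy mask are hypothetical.

```python
from collections import deque

def count_connected_regions(mask):
    """Count 4-connected regions of truthy cells in a 2-D list."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    regions = 0
    for r in range(height):
        for c in range(width):
            if not mask[r][c] or seen[r][c]:
                continue
            regions += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:   # flood-fill everything reachable from (r, c)
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return regions

# "vehicle" pixels for three cars: two touching (columns 0-1 and 2-3), one apart.
vehicle_mask = [
    [1, 1, 1, 1, 0, 1],
    [1, 1, 1, 1, 0, 1],
]
regions = count_connected_regions(vehicle_mask)
print(regions)
```

The scene contains three cars, but only two regions are found because the touching cars merge into one blob. Separating them reliably requires instance-level predictions.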

What industries use semantic segmentation vs instance segmentation?

Manufacturing, robotics, security, and satellite imagery analysis use both techniques based on specific task requirements. The key differentiator is whether the application needs instance-level distinction or just categorical classification.

When to choose instance segmentation over semantic segmentation?

Choose instance segmentation over semantic segmentation when tasks demand distinguishing individual objects, such as tracking pedestrians in crowds, counting vehicles in traffic, or enabling precise robotic interactions. Instance segmentation provides unique IDs and boundaries for each instance, at the price of higher computational costs.

How does panoptic segmentation combine both approaches?

Panoptic segmentation combines semantic and instance approaches by labeling every pixel: semantic labels for “stuff” classes like roads or sky without instance separation, and instance labels for “things” like cars or people with unique IDs. In many architectures, a fusion module merges outputs from separate semantic and instance heads into a unified map, which suits comprehensive scene understanding in autonomous driving.

How does class imbalance affect training data strategies for each?

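Semantic segmentation training often uses a class-weighted loss so that rare classes are not swamped by dominant ones such as sky or road. Instance segmentation benefits from balanced sampling of instances per image to prevent bias toward frequently occurring objects. Annotation guidelines often overlook this data preparation step.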
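
A minimal sketch of the weighted-loss idea for semantic segmentation follows. It assumes inverse-frequency weights normalized by the number of classes, and a weighted mean of per-pixel cross-entropy. Both are common choices rather than the only ones, and the function names are hypothetical.

```python
import numpy as np

def inverse_frequency_weights(label_map, num_classes):
    """Weight each class by 1 / (num_classes * pixel frequency); absent classes get 0."""
    counts = np.bincount(label_map.ravel(), minlength=num_classes).astype(float)
    freqs = counts / counts.sum()
    weights = np.zeros(num_classes)
    present = counts > 0
    weights[present] = 1.0 / (num_classes * freqs[present])
    return weights

def weighted_cross_entropy(probs, label_map, weights):
    """Weighted mean of per-pixel cross-entropy for (num_classes, H, W) probabilities."""
    rows, cols = np.indices(label_map.shape)
    p_true = probs[label_map, rows, cols]   # probability given to the true class
    pixel_weights = weights[label_map]
    return float((pixel_weights * -np.log(p_true)).sum() / pixel_weights.sum())

# Class 0 covers 6 of 8 pixels, class 1 only 2.
label_map = np.array([
    [0, 0, 0, 0],
    [0, 0, 1, 1],
])
weights = inverse_frequency_weights(label_map, num_classes=2)
probs = np.full((2, 2, 4), 0.5)   # an uninformative prediction
loss = weighted_cross_entropy(probs, label_map, weights)

print(weights.tolist())
print(loss)
```

The rare class receives three times the weight of the common one, so errors on its few pixels contribute as much to the loss as errors on the dominant class.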

What quantization techniques optimize instance segmentation for mobile deployment?

Post-training quantization converts model weights, for example those of Mask R-CNN, from FP32 to INT8. This shrinks weight storage by roughly 4x and can reduce inference time with a small accuracy drop, although the actual speed-up depends on hardware and runtime support. Simpler semantic models tend to quantize more easily.

What data formats do you support?

AnnotationBox supports COCO, YOLO, PASCAL, and other formats for seamless integration into your ML workflows, handling high-density images with no object limit.

What is your accuracy rate and quality process?

We achieve 95%+ accuracy through a 4-step process: project assessment, sample labeling, team training, and monitored production with quality analysts ensuring consistent high standards.

What ROI can I expect from outsourcing annotation?

Outsourcing boosts model accuracy by up to 95% and cuts annotation time by 30%, accelerating projects like deforestation monitoring by 50%


- Semantic Segmentation vs Instance Segmentation: Key Differences - December 18, 2025
