LiDAR (Light Detection and Ranging) is a remote sensing technology that uses laser light to measure distances and build detailed 3D maps of objects and their surroundings. A LiDAR system emits laser pulses, and a receiver captures the reflected light. The system calculates the distance to each object from the time the pulse takes to return.

LiDAR plays a key role in autonomous vehicles, where it detects and maps nearby objects such as pedestrians, road dividers, other vehicles, and buildings. Before a vehicle's AI can learn from this data, the data must be annotated, which is why LiDAR annotation matters so much in autonomous driving.

In this blog, we will look at what LiDAR annotation means, why it plays a major role in the operation of autonomous vehicles, and how the annotation process works.

Before looking at its role in autonomous vehicles, it helps to understand what LiDAR annotation refers to. LiDAR annotation is the process of labeling 3D point cloud data to identify and categorize objects within a scene. It gives structure and meaning to raw LiDAR data, allowing artificial intelligence (AI) and machine learning models to understand and interpret the 3D environment.

The key aspects of LiDAR annotation include:

- 3D point clouds – LiDAR sensors generate massive point clouds, which are collections of 3D coordinates. Together, these points define the surfaces and objects in a scene.

- Labeling objects – The primary objective of annotation is to label these points so that the dataset becomes meaningful to a model. Objects are labeled by drawing 3D bounding boxes, assigning a semantic label to every point, or tracking objects over time.

- Training machine learning models – Labeled data is used to train AI models, helping them recognize and respond to their surroundings accurately. The quality of these labels directly affects how well a self-driving car's models perform.
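To make these terms concrete, here is a minimal sketch of how one labeled frame might be represented in Python. The `Box3D` class, its field names, and the toy coordinates are illustrative assumptions rather than a standard format; real datasets each define their own schema and usually store points in NumPy arrays instead of lists.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Box3D:
    """Hypothetical object-level label: center and size in meters, heading in radians."""
    label: str
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float
    yaw: float = 0.0


# A toy point cloud: each point is an (x, y, z) coordinate in meters.
points = [
    (5.0, 0.1, 0.2), (5.1, -0.2, 0.9), (5.2, 0.3, 1.4),  # returns from a car
    (12.0, 3.0, 0.0), (12.5, 3.1, 0.0),                  # returns from the road surface
    (8.0, -4.0, 1.7),                                    # a return from a pedestrian
]

# Semantic segmentation: one class name per point, in the same order as `points`.
semantic_labels = ["car", "car", "car", "road", "road", "pedestrian"]

# Object detection: the car is also labeled as a single 3D bounding box.
car_box = Box3D("car", cx=5.1, cy=0.0, cz=0.8, length=4.5, width=1.9, height=1.6)

assert len(points) == len(semantic_labels), "every point needs exactly one label"
print(Counter(semantic_labels))  # how many points belong to each class
```

The per-point labels and the box describe the same car at two levels of detail, which is why projects often produce both.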

As mentioned earlier, annotation plays a pivotal role in the functioning of autonomous vehicles. The following section explains this role in more detail.

Understanding the Role of LiDAR Annotation in Autonomous Vehicles

LiDAR annotation produces the structured datasets used to train an autonomous vehicle's AI to understand its surroundings. Annotators label pedestrians, cars, traffic signs, and other objects, which helps the car perceive the world accurately. Here is a closer look at the role of LiDAR annotation in autonomous vehicles:

A. Object Identification and Classification

Annotation identifies and labels objects in a 3D point cloud, such as other vehicles, pedestrians, and cyclists. These labels provide the data AI models need to learn to recognize and classify such objects.

B. Environmental Understanding

Segmenting the point cloud into meaningful objects and features helps the vehicle understand its environment. Segmentation is crucial for tasks such as identifying road boundaries, lane markings, and free space, all of which autonomous navigation depends on.

C. Collision Avoidance and Path Planning

Accurate object labels help vehicles plan safe trajectories and avoid collisions. Models trained on precise LiDAR annotations produce fewer false positives (for example, braking for an obstacle that is not there), which makes autonomous vehicles behave more reliably on the road.

D. Training Machine Learning Models

High-quality annotated data is the foundation for training the machine learning models behind an autonomous vehicle's decision-making. Raw sensor data is unstructured: without labels, a model has no ground truth to learn from, so raw data alone cannot be used to train these models.

E. Enabling Perception in Various Conditions

LiDAR provides precise depth and spatial information that 2D camera images lack. Because LiDAR supplies its own illumination, it stays reliable in low-light conditions where cameras might struggle. Proper 3D point cloud annotation ensures that the vehicle's system can interpret this data accurately across a wide range of weather and lighting conditions.

High-quality LiDAR annotation creates the foundation for training AI and machine learning models in autonomous vehicles. To understand the annotation process better, it helps to know the basic components of a LiDAR system.

Understanding the Basic Components of LiDAR Systems

A LiDAR system relies on a few core components working together to produce accurate results. Knowing what each one does makes the rest of the process easier to follow. Here's a look at the basic components of LiDAR systems:

A. Laser

The laser is the source that emits pulses of light, often in the near-infrared range. The system measures distance by timing how long each pulse takes to reflect off an object and return.
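The timing principle can be sketched in a few lines of Python. Because the pulse travels to the object and back, the one-way distance is half the round-trip time multiplied by the speed of light. This sketch assumes the speed of light in vacuum and ignores the small correction for air as well as any sensor-specific calibration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # in vacuum; air slows the pulse very slightly


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Return the one-way distance in meters for a pulse's round-trip time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse covers the distance twice (out and back), so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# A reflection that arrives 200 nanoseconds after the pulse was emitted:
print(f"{range_from_time_of_flight(200e-9):.2f} m")  # prints "29.98 m"
```

The same arithmetic shows why LiDAR timing electronics must be fast: one nanosecond of timing error corresponds to roughly 15 cm of range error.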

B. Scanner

The scanner mechanically or electronically directs a laser beam in various directions to scan the environment and collect spatial data, creating a 3D point cloud.
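Each return is recorded as a distance plus the direction the scanner was pointing at that moment. The sketch below converts such (range, azimuth, elevation) measurements into x, y, z points. The axis convention (x forward, y left, z up) is an assumption for illustration; sensors and datasets define their own.

```python
import math


def to_cartesian(range_m, azimuth_deg, elevation_deg):
    """Convert one return (distance plus beam direction) into an (x, y, z) point in meters.

    Assumed convention: x forward, y left, z up; azimuth measured in the
    horizontal plane from the x-axis, elevation measured up from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # length of the return projected onto the x-y plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


# Three returns: straight ahead, 90 degrees to the left, and ahead but 30 degrees upward.
scan = [(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (5.0, 0.0, 30.0)]
cloud = [to_cartesian(r, az, el) for r, az, el in scan]
for point in cloud:
    print(tuple(round(c, 3) for c in point))
```

Repeating this conversion for every return in a sweep is what turns the scanner's measurements into the 3D point cloud that annotators later label.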

C. Detector

The detector receives reflected light pulses and converts them into electrical signals for analysis. Common detector types include photodiodes and avalanche photodiodes for high sensitivity.

D. Processing Unit

The processing unit analyzes the electrical signals, converts them into usable spatial information, and builds 3D models of the surroundings. It can include microcontrollers or specialized processors.

Companies like Annotation Box offer data labeling services to help train AI models for self-driving cars. Using such services can reduce both annotation time and annotation errors. The following section explains how LiDAR annotation is done.

How Is LiDAR Data Annotation Done?

LiDAR data annotation typically involves the following key steps:

A. Data Loading and Preprocessing

The raw 3D point cloud data collected by LiDAR sensors is loaded into specialized annotation software. Preprocessing then filters out noise, aligns data from multiple sensors into a common coordinate frame, and converts the data into a format the annotation tool supports.
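A minimal preprocessing sketch, assuming the sensor-to-vehicle calibration is a known rigid transform. A simple validity and range filter stands in for noise removal here (production pipelines often add statistical outlier removal), and the mounting offsets and range limits are hypothetical values.

```python
import math


def make_extrinsic(yaw_rad, tx, ty, tz):
    """Homogeneous 4x4 transform: rotate about z by yaw, then translate (hypothetical calibration)."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(T, point):
    """Map an (x, y, z) point through a 4x4 homogeneous transform."""
    x, y, z = point
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


def preprocess(points, T_sensor_to_vehicle, min_range=1.0, max_range=100.0):
    """Drop invalid and out-of-range returns, then express the rest in the vehicle frame."""
    cleaned = []
    for p in points:
        if any(math.isnan(v) for v in p):
            continue  # corrupted or missing return
        r = math.sqrt(sum(v * v for v in p))  # distance from the sensor
        if not (min_range <= r <= max_range):
            continue  # too close (e.g. hits on the ego vehicle) or too far to trust
        cleaned.append(apply_transform(T_sensor_to_vehicle, p))
    return cleaned


# LiDAR assumed to be mounted 1.2 m forward of and 1.8 m above the vehicle origin, unrotated.
T = make_extrinsic(0.0, 1.2, 0.0, 1.8)
raw = [(10.0, 0.0, -1.8), (0.3, 0.0, 0.0), (float("nan"), 1.0, 1.0), (250.0, 0.0, 0.0)]
print(preprocess(raw, T))
```

Only the first return survives; it lands at ground level 11.2 m ahead of the vehicle origin once the mounting offset is applied.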

B. Initial Segmentation

The point cloud is then roughly segmented into general regions of interest such as roads, buildings, vehicles, and pedestrians. Automated pre-labeling from annotation tools often serves as the base layer that annotators then refine.
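One common way to produce a rough first segmentation is to separate the ground from everything else. The sketch below swaps in RANSAC plane fitting for that step: it repeatedly fits a plane through three random points and keeps the plane with the most points within a distance threshold. The threshold, iteration count, and the assumption that the ground is the dominant flat surface are illustrative choices, not tuned values.

```python
import random


def fit_plane(p1, p2, p3):
    """Plane through three points as (a, b, c, d) with a*x + b*y + c*z + d = 0 and unit normal."""
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    a, b, c = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx  # cross product = normal
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-9:
        return None  # the three points are collinear, so no unique plane
    a, b, c = a / norm, b / norm, c / norm
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])


def ransac_ground(points, threshold=0.15, iterations=200, seed=0):
    """Return one flag per point: True if it lies on the best-supported plane (assumed ground)."""
    rng = random.Random(seed)
    best = [False] * len(points)
    for _ in range(iterations):
        plane = fit_plane(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        inliers = [abs(a * x + b * y + c * z + d) <= threshold for x, y, z in points]
        if sum(inliers) > sum(best):
            best = inliers
    return best


# A flat 5 x 5 patch of ground plus five returns from a car standing on it.
ground = [(x, y, 0.0) for x in range(5) for y in range(5)]
car = [(2.0, 2.0, 1.0), (2.5, 2.0, 1.2), (2.0, 2.5, 1.4), (2.5, 2.5, 1.5), (2.2, 2.2, 0.9)]
flags = ransac_ground(ground + car)
print(sum(flags), "ground points,", len(flags) - sum(flags), "non-ground points")
```

Annotators would then only need to split the non-ground points into objects, which is far less work than labeling every point from scratch.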

C. Detailed Labeling

Each relevant object is then labeled, either manually or semi-automatically. Annotators use techniques such as 3D bounding boxes, semantic segmentation, instance segmentation, and polylines for lanes and road markings. These detailed labels enable self-driving systems to learn precise object detection and classification.
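When an annotator draws a 3D bounding box, the tool typically also works out which points fall inside it. Here is a sketch of that test for a box rotated only about the vertical axis, assuming the box is described by its center, its size (length, width, height), and its heading (yaw).

```python
import math


def points_in_box(points, center, size, yaw):
    """Return the indices of points inside an oriented 3D bounding box.

    center = (cx, cy, cz) and size = (length, width, height) in meters;
    yaw = heading around the vertical z-axis in radians.
    """
    cx, cy, cz = center
    length, width, height = size
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    inside = []
    for i, (x, y, z) in enumerate(points):
        dx, dy, dz = x - cx, y - cy, z - cz
        # Rotate the offset by -yaw so that the box becomes axis-aligned.
        local_x = dx * cos_y + dy * sin_y
        local_y = -dx * sin_y + dy * cos_y
        if abs(local_x) <= length / 2 and abs(local_y) <= width / 2 and abs(dz) <= height / 2:
            inside.append(i)
    return inside


# A 4 m x 2 m x 1.5 m car box whose length points along the y-axis (yaw = 90 degrees).
pts = [(10.0, 0.0, 1.0), (10.0, 1.5, 1.0), (11.5, 0.0, 1.0), (10.0, 0.0, 2.0)]
labels = ["unlabeled"] * len(pts)
for i in points_in_box(pts, center=(10.0, 0.0, 1.0), size=(4.0, 2.0, 1.5), yaw=math.pi / 2):
    labels[i] = "car"
print(labels)
```

The third point is 1.5 m to the side of a 2 m-wide box, and the fourth is above its roof, so only the first two receive the "car" label.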

D. Quality Assurance and Refinement

The annotations are thoroughly reviewed for accuracy and consistency. This review may involve multiple levels of human validation as well as automated error detection tools. Incorrect annotations are corrected to keep the datasets for self-driving vehicles high in quality.
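Automated error detection often starts with simple rule-based plausibility checks that run before human review. The class list, length ranges, and minimum point count below are hypothetical placeholders; real values would come from a project's own annotation guidelines.

```python
# Hypothetical plausible box lengths in meters per class.
LENGTH_RANGE_M = {"car": (3.0, 6.0), "pedestrian": (0.2, 1.2), "cyclist": (1.2, 2.5)}
MIN_POINTS = 5  # hypothetical: boxes with fewer returns are flagged for review


def qa_flags(annotation):
    """Return a list of human-readable issues for one box annotation (empty list = passes)."""
    issues = []
    label = annotation["label"]
    if label not in LENGTH_RANGE_M:
        issues.append(f"unknown class '{label}'")
    else:
        lo, hi = LENGTH_RANGE_M[label]
        if not lo <= annotation["length"] <= hi:
            issues.append(f"length {annotation['length']} m outside {lo}-{hi} m for {label}")
    if annotation["num_points"] < MIN_POINTS:
        issues.append(f"only {annotation['num_points']} points inside box")
    return issues


annotations = [
    {"id": 1, "label": "car", "length": 4.4, "num_points": 312},
    {"id": 2, "label": "car", "length": 0.9, "num_points": 40},  # probably a mislabeled object
    {"id": 3, "label": "truck", "length": 9.0, "num_points": 3},
]
for ann in annotations:
    for issue in qa_flags(ann):
        print(f"annotation {ann['id']}: {issue}")
```

Checks like these do not replace reviewers; they simply route the most suspicious labels to a human first.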

E. Handling the Different Challenges

Annotation service providers use interpolation and estimation techniques to handle partially visible or overlapping objects. They also take steps to balance datasets in which some object classes might be underrepresented.
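Interpolation is commonly used to fill in frames where an object is briefly hidden. Here is a minimal sketch, assuming the object moves at roughly constant velocity between two annotated keyframes: the box center is interpolated linearly, and the heading follows the shorter angular path. The dictionary layout is a hypothetical format chosen for illustration.

```python
import math


def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def interpolate_box(box_a, box_b, frame_a, frame_b, frame):
    """Estimate a box at `frame` from keyframe boxes at `frame_a` and `frame_b`.

    Boxes are dicts with center 'x', 'y', 'z' (meters) and heading 'yaw' (radians).
    """
    t = (frame - frame_a) / (frame_b - frame_a)
    # Wrap the heading difference into (-pi, pi] so the box turns the short way round.
    diff = box_b["yaw"] - box_a["yaw"]
    dyaw = math.atan2(math.sin(diff), math.cos(diff))
    return {
        "x": lerp(box_a["x"], box_b["x"], t),
        "y": lerp(box_a["y"], box_b["y"], t),
        "z": lerp(box_a["z"], box_b["z"], t),
        "yaw": box_a["yaw"] + dyaw * t,
    }


# A pedestrian annotated in frames 10 and 14 but occluded in frames 11 to 13.
kf10 = {"x": 20.0, "y": 5.0, "z": 0.9, "yaw": 0.0}
kf14 = {"x": 22.0, "y": 5.0, "z": 0.9, "yaw": 0.2}
for f in range(11, 14):
    print(f, interpolate_box(kf10, kf14, 10, 14, f))
```

Interpolated boxes are estimates, so they are usually marked for review rather than accepted automatically.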

F. Final Integration for Model Training

The final dataset is used to train machine learning models that support autonomous navigation, object detection, and vehicle safety functions. Annotators use feedback from model performance to improve annotation accuracy over successive iterations.

You can also hire expert annotators for video annotation for autonomous vehicles.

Here’s a case study on how Annotation Box is revolutionizing autonomous vehicle training data.

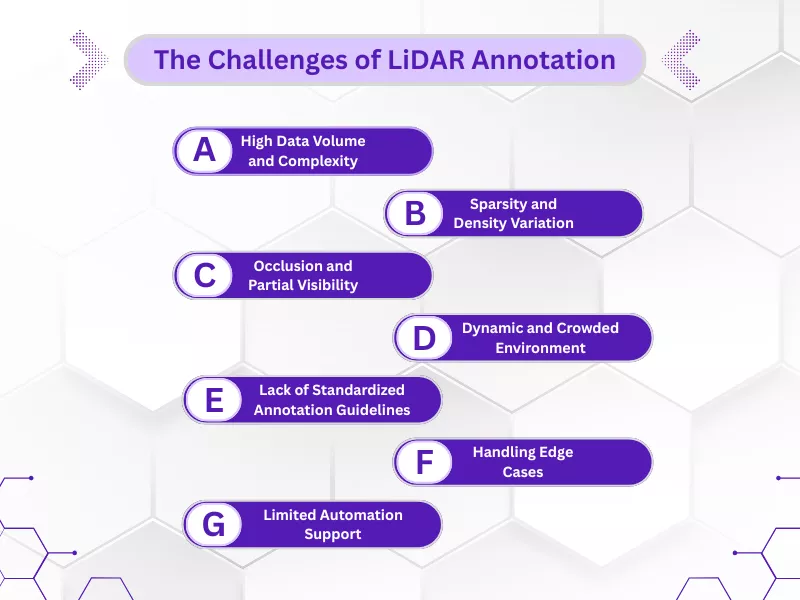

Before we wrap up, let's walk through the challenges of LiDAR annotation.

The Challenges of LiDAR Annotation

LiDAR annotation involves several technical and operational challenges, which make it one of the most complex annotation tasks in applications such as autonomous driving. The following are the major challenges:

A. High Data Volume and Complexity

A LiDAR sensor can generate hundreds of thousands to millions of points per second, forming dense 3D point clouds. Processing and annotating such massive data requires specialized LiDAR annotation tools, and managing and visualizing high-resolution point clouds can be time-consuming, slowing down the entire process.

B. Sparsity and Density Variation

The density of a LiDAR point cloud drops with distance from the sensor, because neighboring laser beams spread farther apart as they travel. Objects near the sensor are covered by many points, while distant objects are represented by only a few. This makes it hard to identify object boundaries and shapes consistently, which complicates both object detection in autonomous vehicles and segmentation during annotation.
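The effect of distance on density can be estimated with simple geometry: neighboring returns on one scan line are separated by roughly the range multiplied by the angular resolution (in radians). The 0.2° horizontal resolution and 4.5 m car length below are assumed for illustration; actual sensors vary.

```python
import math


def point_spacing(range_m, angular_resolution_deg):
    """Approximate gap in meters between neighboring returns (arc length r * dtheta)."""
    return range_m * math.radians(angular_resolution_deg)


def points_across(width_m, range_m, angular_resolution_deg):
    """Rough number of returns one scan line places across an object of the given width."""
    return math.floor(width_m / point_spacing(range_m, angular_resolution_deg))


# Assumed 0.2 degree horizontal resolution; a car seen side-on is about 4.5 m long.
for r in (10, 50, 100):
    spacing = point_spacing(r, 0.2)
    print(f"{r:>3} m: spacing {spacing:.3f} m, ~{points_across(4.5, r, 0.2)} points per scan line")
```

Under these assumptions, a car at 100 m receives roughly ten times fewer points per scan line than the same car at 10 m, which is why distant boxes are harder to place accurately.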

C. Occlusion and Partial Visibility

Objects in 3D scenes are often partially hidden by other objects. A pedestrian standing behind a parked vehicle, for example, may be captured by only a handful of points. Annotators must infer the object's full shape and boundaries from this limited information, which can lead to errors or inconsistencies.

D. Dynamic and Crowded Environment

Autonomous driving scenarios involve multiple moving objects, such as cars, bicycles, and pedestrians. In crowded scenes, the point clouds of nearby objects overlap, so annotators must make careful decisions to separate closely spaced objects.
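Separating closely spaced objects is often bootstrapped with Euclidean clustering, where points closer than a chosen radius are grouped together. The brute-force sketch below is fine for a toy example but far too slow for full scans; the radius and coordinates are illustrative assumptions. It also shows why human judgment is still needed: the result depends heavily on the radius.

```python
import math
from collections import deque


def euclidean_clusters(points, radius=0.5):
    """Assign a cluster id to each point; points within `radius` of each other share a cluster.

    Uses a breadth-first search with brute-force O(n^2) neighbor checks.
    """
    cluster_ids = [-1] * len(points)
    next_id = 0
    for seed in range(len(points)):
        if cluster_ids[seed] != -1:
            continue  # already assigned to an earlier cluster
        cluster_ids[seed] = next_id
        queue = deque([seed])
        while queue:
            i = queue.popleft()
            for j in range(len(points)):
                if cluster_ids[j] == -1 and math.dist(points[i], points[j]) <= radius:
                    cluster_ids[j] = next_id
                    queue.append(j)
        next_id += 1
    return cluster_ids


# Two pedestrians standing about 1 m apart.
cloud = [(5.0, 0.0, 1.0), (5.2, 0.1, 1.2), (5.1, 0.0, 1.5),
         (5.0, 1.0, 1.0), (5.1, 1.1, 1.3)]
print(euclidean_clusters(cloud, radius=0.5))
```

With `radius=1.0`, the same five points collapse into a single cluster, which is exactly the kind of case an annotator has to resolve by hand.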

E. Lack of Standardized Annotation Guidelines

There are no universal labeling standards for 3D data, so annotation styles and class definitions differ across projects. This makes consistency hard to maintain, especially for large, distributed annotation teams.

F. Handling Edge Cases

Reflections from shiny surfaces, irregular objects, unusual environmental conditions, and other edge cases pose additional challenges for LiDAR data annotation. Annotators often have to make subjective judgment calls, which may reduce dataset uniformity and model reliability.

G. Limited Automation Support

Despite the availability of AI-assisted tools, current automation techniques still struggle to understand complex scenes, dynamic motions, and varying lighting conditions. This makes human verification crucial for ensuring high-quality annotated LiDAR data.

Final Thoughts

Autonomous cars encounter an enormous variety of situations, so the AI models that drive them must be trained on the right data. LiDAR annotation labels raw LiDAR data and turns it into high-quality training data for self-driving cars.

Understanding LiDAR annotation's role in autonomous cars, and the process behind accurate annotations, helps teams make better decisions about their training data. Partnering with a company that offers LiDAR annotation services can be an effective way forward, since experienced annotators can handle the challenges described above and keep annotation quality high.

Frequently Asked Questions

What types of annotation are used in LiDAR data annotation?

The common LiDAR data annotation techniques include 3D bounding boxes to outline objects, semantic segmentation to label each data point by category, and instance segmentation to differentiate individual objects within the same class.

How do annotation services handle the large volume of 3D data from LiDAR sensors?

The annotation service providers use specialized tools and AI-assisted techniques to efficiently label millions of data points in LiDAR point clouds, ensuring high-quality data labeling at scale.

What are the future trends in LiDAR annotation for autonomous vehicles?

Future trends include greater automation of data labeling, deeper integration of machine learning into annotation services, and tighter fusion of LiDAR data with other sensors to improve autonomous vehicle perception and navigation accuracy. LiDAR will likely remain essential for applications that need precise spatial information.

What role does annotated LiDAR data play in advanced autonomous system functions?

Annotated LiDAR data is critical in applications like autonomous navigation, lane detection, path planning, obstacle avoidance, and real-time decision making. It allows autonomous vehicles to respond correctly in dynamic environments.
