Every day, a massive amount of user-generated content is uploaded to the internet. In fact, over 1.1 billion pieces of content are shared on social media daily. With so much uploaded every day, it is crucial to ensure that this content is safe and complies with the rules and guidelines of each platform.

Content moderation helps ensure that the content uploaded every day is safe for the public and complies with community rules and guidelines. The question is how to moderate such massive volumes when manual review alone cannot keep up. One answer lies in AI-powered content moderation tools.

However, there are instances where AI has failed to detect harmful user-generated content. So the question remains: which is better, AI or human content moderation?

This blog explores AI vs human content moderation, outlines the characteristics, advantages, and disadvantages of each, and helps you find the right solution for your next content moderation project.

What Is AI Content Moderation?

AI content moderation automatically reviews and filters user-generated content, detecting offensive language, spam, fake news, and explicit images or videos. AI algorithms are trained to identify specific patterns and can process various types of content, such as text, photos, and videos.

The advantage of AI content filtering and moderation by AnnotationBox lies in its ability to process large amounts of data quickly and deliver instant results. Companies use this type of moderation for quick filtering, better efficiency, and scaling up the content moderation process.

What Is Manual or Human Content Moderation?

As the name suggests, human content moderation is the process by which a team of human moderators manually reviews user-generated content to ensure it complies with the platform’s community guidelines. The process involves flagging posts, images, or comments and deciding whether they violate any rules. Unlike AI, human moderators take various factors into account, such as context, tone, and cultural nuances.

All of these help moderators make more accurate decisions. Undeniably, human moderation is slower than AI moderation. However, humans can interpret subtle meanings, identify sarcasm or irony, and make decisions based on the bigger picture, which AI systems might miss.

These definitions should clarify what AI and human content moderation mean. Content moderation services help companies ensure that their content is properly moderated.

The following section will outline the differences between AI content moderation and human content moderation.

AI vs Human Content Moderation: The Key Differences

Before deciding which is better, let's walk through the key differences so you have a clear picture.

| Aspect | AI Moderation | Human Moderation |
|---|---|---|
| Speed | Very fast; processes massive volumes in real time | Slower; limited by workforce size |
| Volume capacity | Handles massive data at scale | Limited capacity, slower processing |
| Context understanding | Low; struggles with nuance, sarcasm, and cultural references | High; excels at subtle cues and context |
| Accuracy | Consistent, but misses complex cases | More accurate on complex, subjective cases |
| Cost | Higher initial development cost, lower ongoing cost | Higher ongoing costs |
| Scalability | Easily scales to large data volumes | Requires hiring and training; limited scalability |
| Adaptability | Slow to adapt to new trends and language | Adapts quickly to new trends and guidelines |
| Consistency | Highly consistent rule application | Consistency varies between moderators |
| Mental health impact | None directly | High risk due to exposure to harmful content |
| Bias | Can inherit or amplify biases from training data | Subject to human subjective bias |
| Transparency | Low; decisions are often hard to explain | Higher transparency and accountability |

These differences highlight the strengths and weaknesses of each approach and show where one outperforms the other. However, the question of which one does better content moderation remains. Let's address it in the following section.

Automated Moderation and Human Moderation: Which One to Choose?

The dilemma for companies is choosing between AI and human content moderation. On the one hand, managing the massive amount of user-generated content requires automated moderation techniques, while on the other, human moderators are needed to address the challenges of false positives and negatives.

The differences above already indicate when one approach suits a task better than the other. For further insight, here are a few parameters to consider when making the choice:

A. Speed

The automated moderation process handles large volumes of content quickly. AI moderation tools can scan, flag, and remove inappropriate content in real time. Automating content moderation is beneficial for platforms that have a continuous flow of user-generated content.

B. Scalability

Automated AI tools make content moderation scalable, as they can scan thousands of posts, comments, and videos across different platforms. As a result, AI content moderation is considered highly effective for platforms with a continuous flow of content. This is one of the major benefits of AI content moderation over human moderation.

C. Consistency and Bias

Automated content moderation ensures consistent enforcement of content policies. However, AI can inherit or amplify biases from its training data, and when it comes to specific nuances, human moderators can be the better choice. Relying solely on automated moderation also risks false positives (acceptable content removed) and false negatives (harmful content missed). This is why the nuanced judgment of human moderators is necessary.
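To make false positives and false negatives concrete, here is a minimal Python sketch that compares AI decisions against human reviewer judgments and counts each type of error. The `count_errors` function and the sample data are hypothetical illustrations, not part of any real moderation tool; the sketch assumes human labels are treated as the ground truth.

```python
from typing import List, Tuple


def count_errors(ai_flags: List[bool], human_labels: List[bool]) -> Tuple[int, int]:
    """Return (false_positives, false_negatives).

    ai_flags[i] is True if the AI flagged item i as violating.
    human_labels[i] is True if a human reviewer judged item i as violating
    (assumed here to be the ground truth).
    """
    if len(ai_flags) != len(human_labels):
        raise ValueError("inputs must have the same length")
    # False positive: AI flagged it, but a human judged it acceptable.
    false_positives = sum(1 for ai, human in zip(ai_flags, human_labels) if ai and not human)
    # False negative: AI let it through, but a human judged it harmful.
    false_negatives = sum(1 for ai, human in zip(ai_flags, human_labels) if human and not ai)
    return false_positives, false_negatives


# Hypothetical sample: five posts, AI decisions vs. human judgments.
ai = [True, True, False, False, True]
human = [True, False, False, True, True]
fp, fn = count_errors(ai, human)
print(fp, fn)  # 1 1
```

Tracking both counts separately matters because they carry different costs: false positives frustrate legitimate users, while false negatives leave harmful content online.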

D. Accuracy and Contextual Understanding

AI tools are fast, but they can struggle with sarcasm, context, and cultural nuances. This leads to inaccuracies, which makes human moderators all the more important. Humans can understand the context, tone, and intent behind content, making moderation more accurate.

If this still leaves you undecided, the next section outlines the right approach.

Human Moderation vs AI Moderation: The Best Way to Moderate Content

Moderating the massive amount of user-generated content on digital platforms, especially social media, cannot be handled by AI or human moderation alone. Instead, you need to combine them for effective content moderation. An effective moderation system can review harmful or inappropriate content before it is posted on any digital platform.

Automated tools can scan massive amounts of data and send flagged content to human moderators for review before it's posted on the web. Companies must use both to achieve the desired results and keep their websites and social media platforms free from harmful or disturbing content. For proper implementation, it is necessary to understand how AI assists human content moderators.
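The flag-and-route workflow of a hybrid system can be sketched in a few lines of Python. This is a minimal, hypothetical illustration: it assumes an AI classifier has already given each post a violation score between 0 and 1, and the thresholds, the `Post` type, and the `route` and `build_review_queue` functions are invented for this example rather than taken from any real moderation product.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical thresholds; real platforms would tune these per policy and content type.
REMOVE_THRESHOLD = 0.95   # scores at or above this are removed automatically
APPROVE_THRESHOLD = 0.20  # scores below this are published automatically


@dataclass
class Post:
    post_id: str
    violation_score: float  # assumed output of an AI classifier, in [0, 1]


def route(post: Post) -> str:
    """Decide what happens to a post based on the AI's confidence."""
    if post.violation_score >= REMOVE_THRESHOLD:
        return "auto_remove"   # clear-cut violation
    if post.violation_score < APPROVE_THRESHOLD:
        return "auto_approve"  # clearly acceptable
    return "human_review"      # uncertain cases go to a human moderator


def build_review_queue(posts: List[Post]) -> List[Post]:
    """Return only the posts that need a human decision, most suspicious first."""
    queue = [p for p in posts if route(p) == "human_review"]
    return sorted(queue, key=lambda p: p.violation_score, reverse=True)


posts = [Post("a", 0.98), Post("b", 0.05), Post("c", 0.60), Post("d", 0.35)]
for p in posts:
    print(p.post_id, route(p))
print([p.post_id for p in build_review_queue(posts)])  # ['c', 'd']
```

Widening the band between the two thresholds sends more posts to human moderators, trading speed for accuracy; narrowing it does the reverse.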

Reputable companies like AnnotationBox offer solutions based on a hybrid approach to ensure content moderation is carried out correctly. You can also use image sorting and filtering services to ensure all images comply with platform guidelines.

Before we end the discussion, let’s take you through a few examples of companies that follow the hybrid approach to ensure effective content moderation.

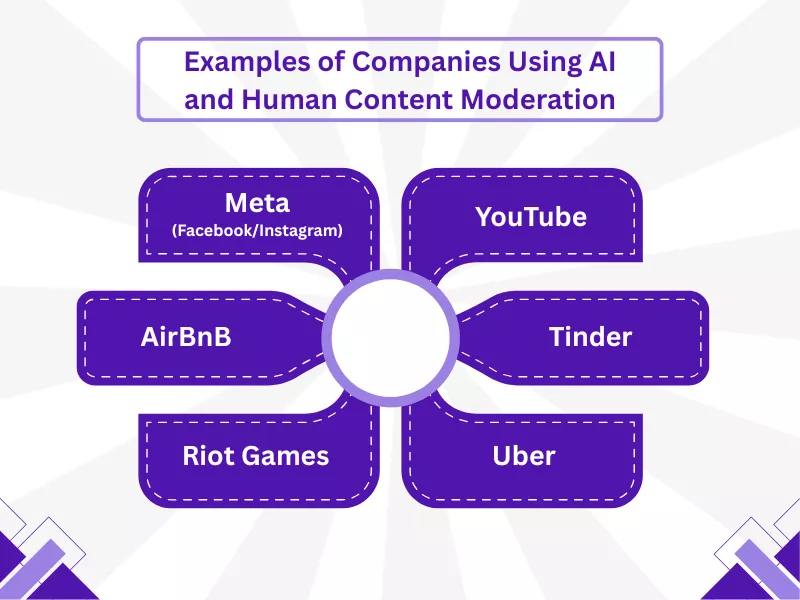

Examples of Companies Using AI and Human Content Moderation

Numerous companies use both AI and human content moderation together to ensure the content posted on their platforms meets the guidelines and is accurately moderated. Here are a few examples:

A. Meta (Facebook/Instagram)

Meta uses AI to scan for violations such as hate speech and nudity, automatically removing clear-cut cases and sending questionable cases to human reviewers. Human moderators then handle cases that need further judgment.

B. YouTube

YouTube uses AI to flag copyrighted content and hate speech quickly. Human reviewers then assess the flagged content and make final decisions on removals or other actions.

C. Airbnb

The company uses AI to detect fake reviews and human moderators to review listings and other content. Together, the two ensure that all content posted on the site complies with the platform guidelines.

D. Tinder

Tinder combines AI and human moderation to manage user-generated content in order to maintain a safe environment on its platform.

E. Riot Games

Riot Games uses AI to monitor in-game chat and other user-generated content to identify and remove violations. Human moderators review the edge cases and appeals.

F. Uber

The company leverages AI, combining it with human moderation to ensure safety and compliance in user interactions and platform content.

These examples illustrate best practices for combining AI and human content moderation and should help you make your decision. We recommend using both to keep your platform safe from all types of harmful and inappropriate content.

To Sum Up

The flood of user-generated content on digital platforms demands careful scrutiny before it is posted. With the amount of content growing every day, it is necessary to implement the best possible ways to secure your platform. The emergence of AI has helped control the massive amount of content. Yet, the process is incomplete without the human touch.

In a nutshell, companies implementing moderation solutions prefer a combination of AI and human content moderation for the best results. Despite their key differences, neither approach can do without the other; on its own, each leaves moderation incomplete.

Frequently Asked Questions

Can AI replace human content moderators?

AI models can filter content quickly and handle large volumes of online content in real time. However, AI cannot fully replace human moderators, because humans provide the judgment and oversight necessary for effective content moderation.

Is AI content moderation accurate?

AI content moderation systems are consistent and efficient at removing potentially harmful content, such as hate speech, at scale. However, AI may miss subtle context and cultural nuances, leading to a few false positives or negatives.

How fast is AI content moderation compared to humans?

AI allows content moderation teams to filter content in real time, processing millions of posts and videos faster than any human could. AI-powered tools operate 24/7 without fatigue, so moderation teams can keep user-generated content in check around the clock. Speed and constant availability are the main advantages of AI content moderation.

What are the mental health risks for human content moderators?

Human moderators face exposure to potentially harmful content and controversial material, which can negatively affect their mental health, leading to stress, trauma, or burnout.

How do human moderators and AI work together?

AI and human moderators work together in a hybrid content moderation system. AI models filter and flag content quickly, handling large volumes automatically. Flagged content that requires contextual understanding or complex decisions is then sent to human moderators for review, ensuring moderation outcomes align with nuanced policies.

Can AI content moderation handle cultural nuances and sarcasm?

AI models struggle to detect cultural nuances, sarcasm, and the subtleties of human speech because they lack deep contextual understanding. Human moderators are better at recognizing these nuances, which leads to more accurate moderation.

- AI vs Human Content Moderation: Who Does Better in Content Filtering? - November 21, 2025
