Audio annotation is the process of labeling audio data so that AI systems can interpret human speech and respond appropriately. It involves adding labels to elements such as human speech, background noise, emotions, or dialects within an audio file.

Experts annotate audio datasets to create structured training data, using techniques such as audio transcription, audio classification, and sound annotation. This annotated data is later used to train models for machine learning and natural language processing (NLP) tasks.

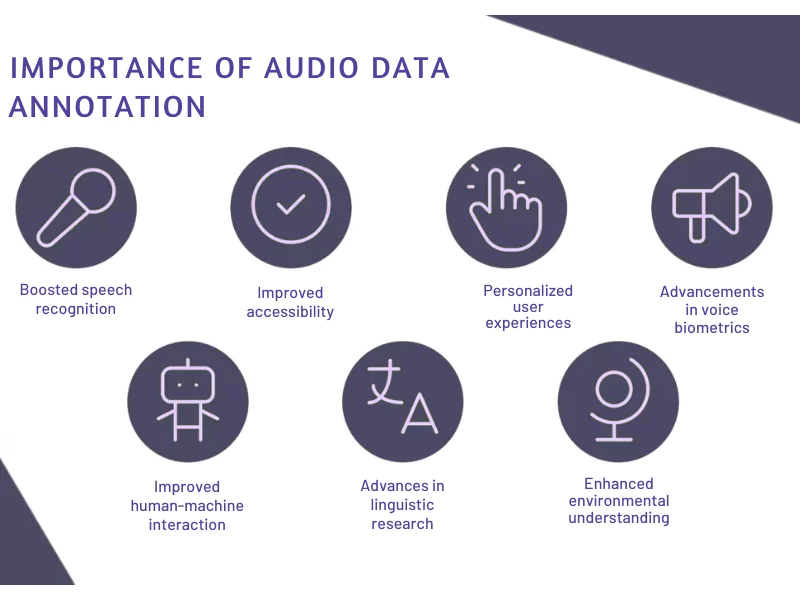

Accurate audio annotation is important for speech recognition systems, speech-to-text applications, virtual assistants, and AI-powered chatbots. Annotators make each annotation task produce reliable datasets by following clear annotation guidelines and maintaining high labeling quality.

Using these datasets, AI models can learn to recognize accents, dialects, emotions, intent, and even background noise. Different types of audio annotation are employed in healthcare voice tools, customer support, and security systems, as well as in other AI applications where audio transcription and classification are essential. In short, audio annotation transforms raw audio recordings into meaningful training data for machine learning models.

Transcribe or Label Audio Data: Difference Between Audio Transcription and Audio Annotation

| Audio Transcription | Audio Annotation |
|---|---|
| Converting speech in an audio file into text. | Labeling and tagging audio data for AI and machine learning. |
| Speech-to-text recognition for readable transcripts. | Audio classification, sound annotation, and intent tagging for more innovative AI models. |
| A text transcript of spoken words. | Annotated data with labels (speakers, emotions, background noise, dialects). |
| Subtitles, documentation, and records. | Training AI models, virtual assistants, and NLP systems. |
| Limited role in speech recognition. | Essential for model training in artificial intelligence and machine learning. |
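To make the distinction concrete, here is a minimal Python sketch of what an annotated record might hold compared with a plain transcript. The class names, field names, file name, and sample values are all hypothetical and chosen only for illustration; real annotation tools define their own schemas.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    start: float              # seconds from the beginning of the file
    end: float
    speaker: str              # speaker label (hypothetical values)
    text: str                 # the transcribed words
    emotion: str = "neutral"  # emotion tag added by the annotator


@dataclass
class AnnotatedAudio:
    audio_file: str
    segments: list[Segment] = field(default_factory=list)
    background_noise: list[str] = field(default_factory=list)

    def transcript(self) -> str:
        """Plain transcription: only the spoken words, in time order."""
        ordered = sorted(self.segments, key=lambda s: s.start)
        return " ".join(s.text for s in ordered)


# Hypothetical sample record for a short support call.
record = AnnotatedAudio(
    audio_file="call_001.wav",
    segments=[
        Segment(0.0, 2.1, "agent", "How can I help you today?"),
        Segment(2.4, 5.0, "customer", "My card was declined twice.",
                emotion="frustrated"),
    ],
    background_noise=["keyboard typing"],
)

print(record.transcript())
```

The transcript is only one view of the record. The speaker, emotion, and background noise labels are the extra information that annotation adds on top of the words.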

Different Types of Audio Annotation Used in AI and Machine Learning

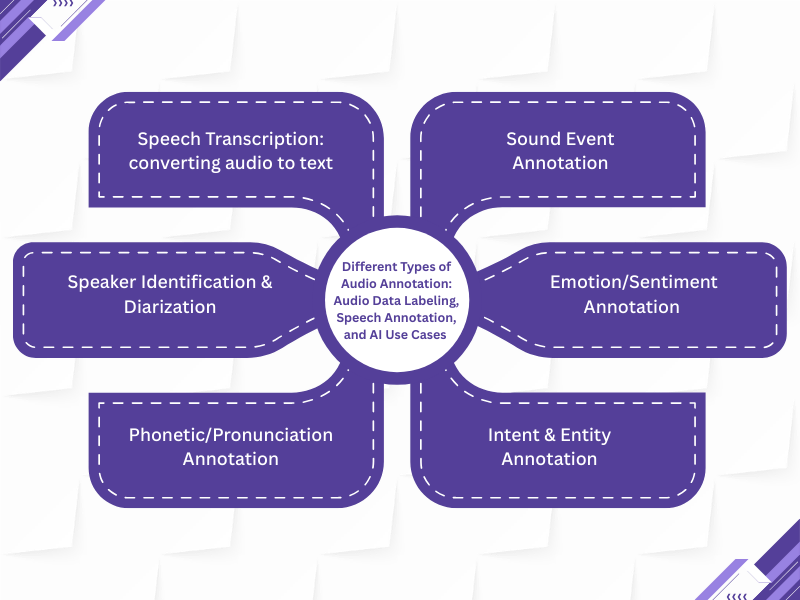

- Speech Transcription converts audio to text, enabling machines to process spoken words in written form for analysis or documentation. It is the foundation of audio annotation.

- Sound Event Annotation enables systems to identify non-speech sounds such as alarms and music, so they can distinguish environmental sounds for security, healthcare, and multimedia applications.

- Speaker Identification & Diarization, one of the major speech annotation types, differentiates voices during a conversation and labels each speaker. This makes the data more structured and practical.

- Emotion/Sentiment Annotation captures the human aspects by tagging mood and tone identifiers, indicating whether speech conveys emotions such as happiness, anger, or sadness, thereby enhancing applications like customer service AI.

- Phonetic/Pronunciation Annotation captures how language is spoken, including accents, pauses, and stress. It helps improve speech recognition for diverse populations.

- Intent & Entity Annotation extracts users' goals and key details from conversations, making chatbots and voice assistants more intelligent.

Together, these annotation methods power smarter, human-like AI interactions.

What Are the Different Types of Audio Annotation for NLP Projects?

Several types of audio annotation are available for different kinds of NLP projects:

Speech Transcription (Converting Audio to Text)

- It transforms spoken language into written text.

- It is one of the most widely used audio annotation services, particularly in building chatbots, transcription apps, and virtual assistants.

Sound Event Annotation

- This type labels specific sounds within an audio recording, such as footsteps, alarms, traffic sounds, or dog barks.

- It is used in surveillance, IoT devices, and smart home systems.
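Sound event labels are commonly stored as time ranges attached to a recording. The sketch below assumes a simple `(start, end, label)` tuple format in seconds; the labels and times are made-up sample data, and the helper names are hypothetical.

```python
from collections import defaultdict

# Hypothetical annotations for one recording: (start_sec, end_sec, label).
events = [
    (0.0, 1.5, "footsteps"),
    (1.2, 4.0, "traffic"),
    (4.5, 5.0, "dog_bark"),
    (6.0, 9.0, "alarm"),
    (9.5, 10.0, "dog_bark"),
]


def events_at(events, t):
    """Return the labels of all events active at time t (events may overlap)."""
    return [label for start, end, label in events if start <= t < end]


def duration_per_label(events):
    """Sum the annotated duration in seconds for each label."""
    totals = defaultdict(float)
    for start, end, label in events:
        if end <= start:
            raise ValueError(f"invalid time range for {label!r}: {start}-{end}")
        totals[label] += end - start
    return dict(totals)


print(events_at(events, 1.3))
print(duration_per_label(events))
```

Allowing overlapping ranges matters here, because real recordings often contain several sounds at once, such as footsteps over traffic.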

Speaker Identification & Diarization

- This method of annotation focuses on multi-speaker audio, separating the voices in a conversation and labeling each speaker.

- It is useful for call centers, legal transcriptions, and meeting analysis.

Emotion and Sentiment Annotation

- This kind of annotation detects emotional tones such as happiness, anger, or sadness.

- Customer service AI, healthcare chatbots, and mental health monitoring tools rely on this type of annotation.

Phonetic & Pronunciation Annotation

- This type focuses on labeling the parts of an audio recording that highlight pronunciation and accent differences.

- These annotations are used in language learning apps, music classification, and improving speech recognition technologies.

Intent & Entity Annotation

- This annotation helps AI understand what the speaker wants to achieve (e.g., booking a flight, asking a question).

- This type is essential in virtual assistants, conversational AI, and customer support automation.
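As an illustration, an intent and entity annotation can be stored as an intent label plus the entity values found in the transcribed utterance. The sketch below is a minimal example under that assumption; the intent name, entity labels, and the `locate_entities` helper are hypothetical, and the utterance simply extends the flight-booking example above.

```python
utterance = "Book a flight from Boston to Denver on Friday"

# Hypothetical annotation produced by a human annotator.
annotation = {
    "intent": "book_flight",
    "entities": [
        {"label": "origin", "value": "Boston"},
        {"label": "destination", "value": "Denver"},
        {"label": "date", "value": "Friday"},
    ],
}


def locate_entities(text, entities):
    """Return (start, end, label) character spans for each entity value."""
    spans = []
    for entity in entities:
        start = text.find(entity["value"])
        if start == -1:
            raise ValueError(f"{entity['value']!r} not found in utterance")
        spans.append((start, start + len(entity["value"]), entity["label"]))
    return spans


spans = locate_entities(utterance, annotation["entities"])
for start, end, label in spans:
    print(f"{label}: {utterance[start:end]} [{start}:{end}]")
```

Storing character spans rather than only the values lets a training pipeline check that every label points at real text in the transcript. Note that `str.find` returns the first match only, so a production tool would record spans directly at annotation time.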

Audio Labeling and Annotation Project Applications

Audio Annotation in Finance

The best type of annotation for a finance project is Speech Transcription (Converting Audio to Text), because the project will handle sensitive audio and speech data such as investor calls, compliance discussions, and customer interactions. By using an audio annotation tool to transcribe or label audio data, you can create a structured document that captures every detail in an audio recording.

This data annotation process allows annotators to listen to the audio and create high-quality transcripts that serve as data for machine learning, fraud detection, and compliance monitoring.

Audio Annotation in Healthcare

A valuable type of annotation for a healthcare project is Emotion and Sentiment Annotation, as it helps detect stress, anxiety, or depression in audio and speech data. Speech transcription is also important for transcribing or labeling audio from doctor-patient conversations and clinical notes. Your healthcare project can annotate audio recordings to ensure accurate documentation of diagnoses and treatments. Healthcare generates large volumes of data, and audio annotation adds structure to audio content such as consultations, emergency calls, or therapy sessions.

Audio Annotation in Legal

A suitable type of annotation for a legal project is Speech Transcription, as legal professionals must transcribe or label audio data from depositions, trials, and witness statements. With the help of an audio annotation tool, your project can annotate audio recordings and convert speech into reliable text for legal use. This improves data quality and supports accuracy in case preparation. Speaker Identification & Diarization is also useful, as it separates the voices within an audio recording and helps attribute statements in audio and video files clearly.

Audio Annotation in Insurance

The most effective type of annotation in the insurance sector is Speech Transcription, as it helps your project transcribe or label audio data from customer calls, policy discussions, and claims interviews. By annotating audio recordings with an audio annotation tool, insurers can create structured documentation from spoken interactions, which improves the quality of the data used for decision-making.

Which Type of Audio Annotation is Best for Speech Recognition Models: Use Case

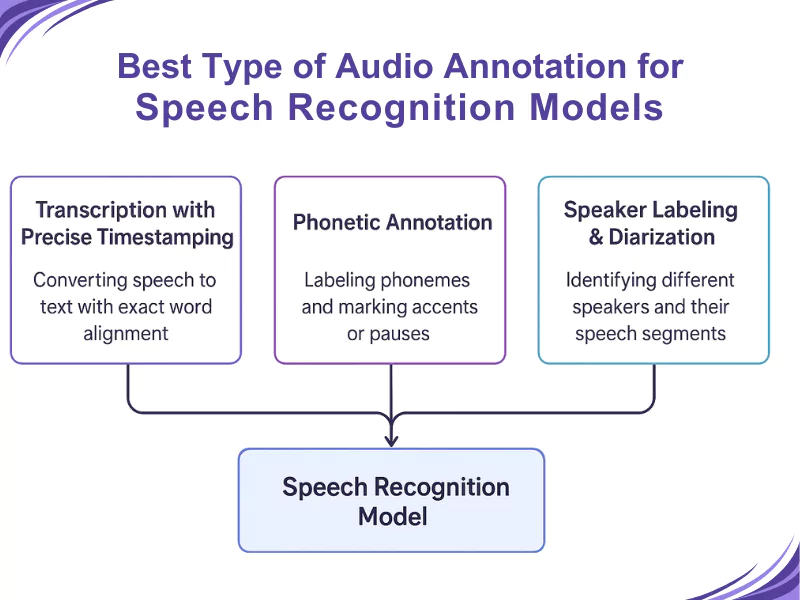

1. Transcription with Precise Timestamping

Transcription, also known as speech-to-text annotation, plays a crucial role in every automatic speech recognition (ASR) system. It provides training data for AI models by converting spoken words into text. Adding word-level timestamps makes the transcription more useful, because each word is tied to its exact position in the audio recording. This precise alignment improves real-time transcription, forced alignment, and phonetic analysis.
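A small sketch can show why word-level timestamps are useful: once each word has a start and end time, you can look up what was being said at any moment in the recording. The word list, the times, and the `word_at` helper below are hypothetical sample data, not output from a real ASR or alignment tool.

```python
from bisect import bisect_right

# Hypothetical word-level timestamps: (word, start_sec, end_sec), sorted by start.
words = [
    ("turn", 0.00, 0.32),
    ("on", 0.32, 0.48),
    ("the", 0.48, 0.60),
    ("kitchen", 0.60, 1.05),
    ("lights", 1.05, 1.50),
]

starts = [start for _, start, _ in words]


def word_at(t):
    """Return the word being spoken at time t, or None during silence."""
    i = bisect_right(starts, t) - 1  # last word that starts at or before t
    if i < 0:
        return None
    word, _, end = words[i]
    return word if t < end else None


print(word_at(0.7))
print(word_at(2.0))
```

Because the start times are sorted, a binary search keeps the lookup fast even for long recordings with many thousands of words.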

2. Phonetic Annotation

While transcription captures words, phonetic annotation goes deeper: it labels phonemes (the smallest sound units) along with markers for accents, pauses, and stress. This helps speech recognition models accommodate dialects and pronunciation variations, making them more inclusive and accurate across different speakers and languages.
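One way to picture phonetic annotation is a small pronunciation lexicon in which a word can have several phoneme sequences. The sketch below assumes ARPAbet-style symbols, where a trailing digit on a vowel marks stress (1 = primary, 2 = secondary, 0 = unstressed). The entries are hand-written for illustration and the helper names are hypothetical.

```python
# Hypothetical lexicon: each word maps to one or more pronunciation variants.
lexicon = {
    "tomato": [
        ["T", "AH0", "M", "EY1", "T", "OW2"],  # one common pronunciation
        ["T", "AH0", "M", "AA1", "T", "OW2"],  # a dialect variant
    ],
}


def syllable_count(phonemes):
    """Count syllables: in this notation, every vowel carries a stress digit."""
    return sum(1 for p in phonemes if p[-1].isdigit())


def primary_stress(phonemes):
    """Return the vowel carrying primary stress (digit 1), without the digit."""
    for p in phonemes:
        if p.endswith("1"):
            return p[:-1]
    return None


for variant in lexicon["tomato"]:
    print(" ".join(variant), "->", syllable_count(variant), "syllables,",
          "primary stress on", primary_stress(variant))
```

Keeping both variants in the training data is what lets a model treat the two pronunciations as the same word instead of flagging one of them as an error.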

3. Speaker Labeling & Diarization

Interactions in everyday situations often feature overlapping dialogue among several participants. Speaker labeling and diarization address this by identifying “who spoke when.” The annotation distinguishes different voices, which makes the conversation easier to follow. Used together with transcription, it also produces high-quality training data. As a result, AI models can better understand natural speech and accommodate different accents.
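The "who spoke when" idea can be sketched by combining speaker turns with word timestamps: each word is attributed to the speaker whose turn overlaps it the most. This is a simplified illustration under assumed data formats, not how any particular diarization tool works; the turns, words, and function names are hypothetical.

```python
# Hypothetical diarization output: (speaker, start_sec, end_sec). Turns may overlap.
turns = [("A", 0.0, 2.0), ("B", 1.8, 4.0)]

# Hypothetical word-level timestamps: (word, start_sec, end_sec).
words = [
    ("hello", 0.1, 0.5),
    ("there", 0.6, 1.0),
    ("hi", 1.9, 2.2),
    ("again", 2.3, 2.9),
]


def overlap(a_start, a_end, b_start, b_end):
    """Length in seconds of the intersection of two time ranges."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(words, turns):
    """Label each word with the speaker whose turn overlaps it the most."""
    labeled = []
    for word, w_start, w_end in words:
        best = max(turns, key=lambda t: overlap(w_start, w_end, t[1], t[2]))
        has_overlap = overlap(w_start, w_end, best[1], best[2]) > 0
        labeled.append((best[0] if has_overlap else None, word))
    return labeled


for speaker, word in assign_speakers(words, turns):
    print(speaker, word)
```

The word "hi" falls inside the overlap between the two turns, and the maximum-overlap rule attributes it to speaker B, whose turn covers more of it.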

Benefits of Selecting the Right Type of Audio Annotation Services for Machine Learning Models

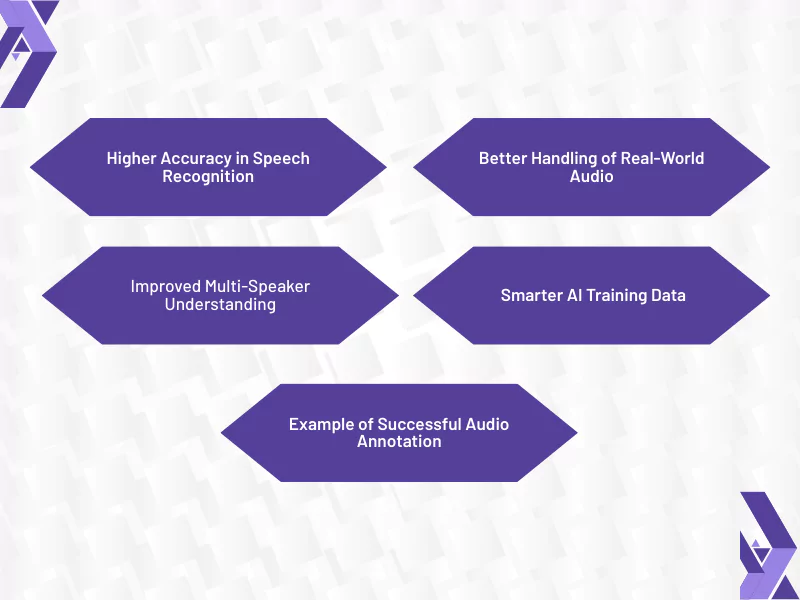

✅ Higher Accuracy in Speech Recognition

- Using correct audio annotation ensures AI can interpret human speech with fewer errors.

- The quality of speech-to-text transcription is enhanced for subtitles, chatbots, and virtual assistants.
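The article does not name a specific accuracy metric, so as an assumption, one common way to quantify "fewer errors" is word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system output into the reference transcript, divided by the number of reference words. The sketch below is a minimal implementation with made-up example sentences.

```python
def word_error_rate(reference, hypothesis):
    """WER = word-level edit distance / number of words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] = edit distance between the ref words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: ref word missing from hyp
                curr[j - 1] + 1,     # insertion: extra word in hyp
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Human-annotated reference vs. a hypothetical model output.
wer = word_error_rate("turn on the kitchen lights", "turn on kitchen light")
print(f"WER: {wer:.2f}")
```

In this example the model drops "the" and gets "lights" wrong, which is two errors against five reference words. The human-annotated transcript serves as the reference, which is why its quality sets the ceiling for any accuracy measurement.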

✅ Better Handling of Real-World Audio

- AI models become more inclusive because phonetic annotation captures accents, pauses, and dialects.

- Background noise labeling helps models filter out distractions in real-world recordings.

✅ Improved Multi-Speaker Understanding

- Speaker labeling and diarization allow AI to identify “who spoke when,” which is vital in call centers and meetings.

✅ Smarter AI Training Data

- Using the correct annotation tools and services makes audio datasets well-structured.

- High-quality annotated data supports reliable machine learning and audio classification.

✅ Example of Successful Audio Annotation

Google Assistant is a real-world success story: its speech recognition has improved across multiple dialects and noisy environments.

Key Reasons AnnotationBox Leads in AI Training Data Solutions

AnnotationBox boasts 95%+ accuracy and is a trusted leader in data annotation and labeling services, powered by a skilled workforce of over 1,000 experts. What sets us apart is our comprehensive service portfolio, which covers data annotation services, image annotation, video annotation, text annotation, audio annotation, medical annotation, geospatial labeling, and more. We deliver scalable, high-quality solutions with six years of proven expertise in industries like healthcare, autonomous vehicles, retail, robotics, and finance.

We combine annotation tools, such as bounding boxes and 3D cuboids, with human-in-the-loop review to create accurate training datasets for AI and ML models. Our clients benefit from secure data handling (GDPR & SOC 2 compliant), rapid project turnaround, and unmatched precision in applications such as speech recognition, NLP, sentiment analysis, and medical imaging. With more than 450 successful projects and a reputation for excellence, we are a proven partner for building successful AI models.

Conclusion

Audio annotation is crucial for training AI and NLP systems, as it provides accurately labeled audio data for tasks such as phonetic labeling and speaker diarization. Annotators listen to the audio to assess annotation accuracy, which is particularly important in speech recognition and virtual assistant applications.

Manual annotation and data labeling approaches can handle many different types of audio recordings. Audio annotation is used across various industries because it helps improve model performance. Choosing the right method is crucial, as inadequate labeling can lead to increased safety concerns. Overall, annotation is essential for a reliable and effective AI system.

Frequently Asked Questions

What are the various forms of audio annotation utilized in AI?

The main forms of audio annotation include transcription, sound event annotation, phonetic annotation, speaker diarization, emotion or sentiment tagging, and intent and entity annotation.

Why is it crucial to select the appropriate type of audio annotation?

Choosing the correct annotation type is crucial because it improves accuracy in speech recognition, produces more dependable machine learning results, and leads to better AI interactions.

How is audio annotation different from audio transcription?

Audio annotation labels and tags elements in an audio file that go beyond the words themselves, such as speakers, emotions, and background noise. Audio transcription, by contrast, only converts the spoken words in an audio file into written text.

Can one audio file have multiple types of annotations applied to it?

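Yes, a single audio file can have multiple types of annotations applied to it. For example, a call recording can carry a transcript, speaker labels, and emotion tags at the same time.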

What role does audio quality play in the accuracy of audio annotation?

Audio quality directly affects the accuracy of audio annotation. Words are easier to recognize in a clear, high-quality recording. In contrast, lower-quality audio leads to errors, missed details, and imprecise timestamps.

What is the difference between speaker identification and speaker diarization?

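Speaker diarization splits a recording into segments by speaker, answering “who spoke when” without needing to know who each speaker is. This keeps speech-to-text transcripts readable and analyzable for both machines and humans. Speaker identification, by contrast, recognizes a speaker by matching a voice to a known identity.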
