Human annotation is the backbone of today’s AI and machine learning systems. AI models can process huge amounts of data, but they still rely on humans to guide them. If the labels are inconsistent or messy, even the most advanced models can make wrong predictions. That’s where human annotators step in: they provide clean, accurate labels that can boost model performance from 60% to over 95%.

Think of annotators as the teachers of AI, giving it the right answers so it can learn correctly. And this matters because an AI model is only as smart as the data it’s trained on. In fact, research shows that 42% of automated labels still require human correction, especially in tricky or ambiguous cases.

In this blog, we’ll explore why human annotation and human-in-the-loop workflows are essential for handling complex AI edge cases and why they remain irreplaceable in building reliable, high-accuracy AI systems.

Data annotators are essential contributors to the development of machine learning and AI systems. They manually label text, images, audio, and video so that AI algorithms can learn the underlying patterns and improve accuracy over time.

In other words, annotators from companies like AnnotationBox review and validate data to ensure consistency and reliability, acting as the crucial human link between raw data and intelligent models. Without annotated datasets, AI would be unable to learn effectively, no matter how advanced the model.

Why Is Human Annotation Important for AI?

Here is the detailed breakdown of why human annotations are needed:

1. Improving Data Quality

Human annotators ensure AI models are trained on accurate, consistent, and context-aware data. Human annotation reduces errors and bias while capturing nuances, such as emotional tone, that AI still struggles to interpret. For tasks like sentiment analysis, human judgment is essential because models require labels that reflect real linguistic and contextual understanding.

2. Managing Ethical and Complex Scenarios

Human annotators also help maintain ethical integrity in sensitive areas such as healthcare and autonomous vehicles. They play a crucial role in quality assurance for medical annotation and provide high-quality data in other sensitive domains. They help ensure that datasets are fair, representative, and unbiased, and they resolve unclear cases, such as dialect variations or morally complex situations, that require thoughtful interpretation.

3. Handling Outliers and Edge Cases

AI systems often fail on rare or unusual events. Human annotators can identify and label these outliers, such as unexpected road hazards or extreme weather conditions, which helps AI models perform reliably in unpredictable real-world environments.

4. Supporting Specialized Domains

Domain experts in sectors such as law, finance, or biology provide accurate annotations for niche subjects. Their expertise ensures that AI models learn from high-quality, detailed, and technically correct data.

5. Refining and Enhancing Models

Human-in-the-loop feedback is vital for continuous improvement and for catching rare annotation issues. Experts review model predictions, correct mistakes, and provide insights that help refine performance.

3 Edge Cases Automation Misses

The edge cases that annotation automation misses are the rare, unusual, or unexpected data points that automated labeling tools struggle to handle. These cases fall outside the patterns a model has learned, causing incorrect labels, low confidence, or unpredictable behavior. Here we will discuss 3 edge cases automation often misses:

1. Contextual or Ambiguous Annotations

Automated systems frequently fall short when interpretation depends on deeper context. Even the most advanced models can misread sarcasm, irony, or ambiguous hints because AI tools operate on learned patterns instead of true intent. In computer vision, this challenge arises in images with overlapping objects, reflections, or nuanced gestures. These are the situations where human-in-the-loop annotation comes in.

The Reason Why Automation Fails Here

Automated labeling works by detecting patterns. As a result, a sentiment model may incorrectly interpret sarcastic phrases like “Oh, you came so early” as positive. In another case, a vision model might mistake a toy gun for a real weapon. In both cases, the system reacts to surface features, not meaning.

Here, human annotators bring the cultural understanding, common sense, and situational awareness that keep labels aligned with real-world interpretation.

Where Human Annotation Makes a Difference

Humans provide clarity where automated systems are prone to misjudgment. Automation often fails to decipher tone, intention, and subtle context, while human annotation catches the nuances that determine whether a label is accurate or potentially misleading. This is vital for preventing minor semantic errors from becoming major sources of bias in deployed models.

Models still struggle with contradictions, idioms, humor, and culturally specific experiences. Here, human reasoning is required to interpret correctly. Without human oversight, such misinterpretations become embedded in model weights and can cause persistent misclassification issues later.
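One common way to surface these ambiguous items is to collect several human votes per item and escalate the ones where annotators disagree. The snippet below is a minimal sketch of that idea, not a description of any particular tool: the function name `aggregate_labels`, the `min_agreement` threshold of 0.75, and the sample votes are all assumptions made for illustration.

```python
from collections import Counter


def aggregate_labels(votes, min_agreement=0.75):
    """Majority-vote one item's labels and flag it when annotators disagree.

    Returns (majority_label, needs_expert_review).
    """
    if not votes:
        raise ValueError("at least one vote is required")
    counts = Counter(votes)
    top_label, top_count = counts.most_common(1)[0]
    agreement = top_count / len(votes)
    # Items below the (assumed) agreement threshold go to an expert reviewer.
    return top_label, agreement < min_agreement


# Hypothetical votes from four annotators on two text snippets.
sarcastic_votes = ["negative", "positive", "negative", "positive"]
plain_votes = ["positive", "positive", "positive", "negative"]

print(aggregate_labels(sarcastic_votes))  # split vote, flagged for review
print(aggregate_labels(plain_votes))      # 3 of 4 agree, accepted
```

A split vote is itself useful information: it often marks exactly the sarcasm, idiom, or cultural reference that the guidelines need to address more explicitly.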

2. Rare or Out-of-Distribution Data

Automated labeling systems often fail when they encounter rare or unfamiliar data that they didn’t see enough of during training, because the model has no reliable pattern to apply. In real-world applications, these edge cases often yield inconsistent outputs and unexpected false positives due to unfamiliar signals.

Why Rare Data Disrupts Automated Annotation

Pre-labeling models and foundation-model-based annotation rely heavily on patterns learned during training. When they encounter something unfamiliar, such as an uncommon object or a new language variant, they can’t interpret it properly. This often leads to a highly confident but incorrect label, which can be extremely risky for downstream systems. Because the model can’t detect its own uncertainty, these low-frequency mistakes can pass through automated quality checks unnoticed.
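Because confident mistakes slip past a simple confidence threshold, a review queue usually needs a second route in: a random audit of the labels that would otherwise be accepted automatically. Here is a rough sketch of that routing logic. The function `route_for_review`, the 0.9 threshold, the 5% audit rate, and the record fields (`id`, `label`, `confidence`) are hypothetical choices for this example.

```python
import random


def route_for_review(predictions, confidence_threshold=0.9,
                     audit_rate=0.05, seed=0):
    """Split pre-labels into a human review queue and an auto-accept list."""
    rng = random.Random(seed)  # seeded so the audit sample is reproducible
    review, auto_accept = [], []
    for item in predictions:
        low_confidence = item["confidence"] < confidence_threshold
        # Random audit: catches labels that are confident but wrong.
        sampled_for_audit = rng.random() < audit_rate
        if low_confidence or sampled_for_audit:
            review.append(item)
        else:
            auto_accept.append(item)
    return review, auto_accept


# Hypothetical pre-labels from an automated labeling model.
predictions = [
    {"id": "img_001", "label": "car", "confidence": 0.99},
    {"id": "img_002", "label": "toy gun", "confidence": 0.62},
    {"id": "img_003", "label": "pedestrian", "confidence": 0.97},
]

review, auto_accept = route_for_review(predictions)
print([item["id"] for item in review])
```

The audit rate is a cost trade-off: a higher rate catches more silent errors but sends more routine items to human reviewers.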

How Does Human QA Bring Stability?

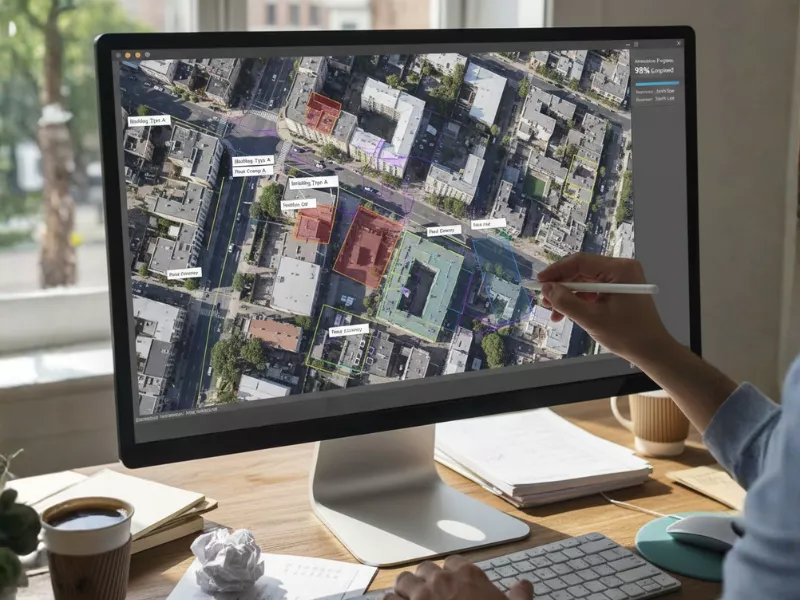

Human annotators are better at handling abnormalities and unusual data. Whether it’s identifying cracks in a new material type, interpreting noisy sensor output, or labeling distinctive satellite imagery, humans can spot misalignments that automated systems miss.

In addition, their insight helps preserve the accuracy of the dataset and keeps the machine learning model on track when a distribution shift occurs, a common reason models degrade once deployed.

Out-of-distribution inputs are unavoidable in machine learning. Automation works well for routine patterns, but only human oversight can detect the “unknown unknowns” and stop silent model failures before they reach production.
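A simple signal that a batch deserves human attention is a change in the mix of labels compared with the training data. The sketch below measures that change with total variation distance. It is only one possible check and it misses shifts that do not show up in the labels; the function names, the sample labels, and the 0.2 alert threshold are assumptions for illustration.

```python
from collections import Counter


def label_distribution(labels):
    """Return each label's share of the batch as a dict of fractions."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    return {label: n / len(labels) for label, n in counts.items()}


def total_variation_distance(reference_labels, new_labels):
    """0.0 means identical label mixes, 1.0 means no overlap at all."""
    p = label_distribution(reference_labels)
    q = label_distribution(new_labels)
    all_labels = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in all_labels)


# Hypothetical label mixes: training data vs. a new production batch.
training_labels = ["car"] * 8 + ["pedestrian"] * 2
production_labels = ["car"] * 5 + ["pedestrian"] * 2 + ["animal"] * 3

distance = total_variation_distance(training_labels, production_labels)
ALERT_THRESHOLD = 0.2  # assumed value; tune per project
if distance > ALERT_THRESHOLD:
    print("Label mix has shifted; send this batch for human review.")
```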

3. Domain-Specific or Fine-Grained Labels Requiring Expertise

Automated labeling tools often struggle when the tasks require expert knowledge instead of simple pattern matching.

A model can recognize shapes, objects, or tone, but it can’t understand meanings that depend on deep, domain-specific context, such as diagnosing a rare issue in a medical scan, labeling complex contract clauses, or spotting tiny defects in factory images.

Why Expert Knowledge Still Matters

Generally, AI models work well for common tasks, but specialized tasks demand a level of accuracy that pattern matching alone rarely reaches. For example, a medical model may miss a small sign that a trained radiologist would notice.

Similarly, in legal documentation, “Consideration” clauses may be tagged differently across contract types. These kinds of mistakes build up over time and can lead to serious real-world problems.

How Humans Fill the Gap

Domain experts ensure that labels are correct and meaningful. They understand the deeper context, keep annotations consistent, and apply proper guidelines so that the model learns from sound examples.

This makes the dataset more accurate and easier to trust, which is especially important in fields with strict rules or safety risks. Combining automation with human review ensures precision. In high-risk areas like healthcare or defense, missing small details can cost far more than investing in human oversight.

Top 5 Reasons Why Fully Automated Labeling Still Fails

Human annotation is essential because automated annotation misses subtle, unusual, or unpredictable edge cases. In this section, we’ll explore why human annotation is critical for AI edge cases and how annotators solve them.

Improving Data Quality

Strong AI systems depend on strong data. That’s where human annotators make the most significant difference. They improve data quality by turning messy, unstructured information into clean, reliable training datasets: removing errors, fixing inconsistencies, and mitigating bias.

While machines can process data fast, only humans understand context. Whether tagging product reviews or labeling social media posts, humans catch things that AI often misses, such as cultural expressions, humor, and emotional tone. This improves the reliability of any ML model and helps it perform better in real life. That’s why humans solve edge case annotation challenges better.

Handling Ethical and Sensitive Situations

In fields such as healthcare, autonomous driving, or law, ethical decisions matter. Human annotators work with unlabeled data and make judgment calls when artificial intelligence can’t.

They ensure the data is fair, unbiased, and representative. They can evaluate accents, cultural differences, and sensitive conversations with care, and this is something machines struggle to do responsibly.

Managing Outliers and Uncommon Cases

AI performs best with common patterns but struggles with rare or unexpected events. Human annotators identify these unusual cases and label them accurately.

For example, in self-driving datasets, annotators don’t just label traffic signs, cars, and pedestrians; they also highlight rare situations such as animals crossing, construction zones, unusual shadows, or fog.

These scenarios help the model learn safer behavior for when real-world conditions become unpredictable.

Supporting Specialized and Expert-Level Data

Some tasks require expert knowledge. That’s why industries like medicine, finance, law, and biology rely on trained professionals through specialized data labeling or data annotation services.

Experts ensure that technical information in medical scans, legal clauses, and financial reports is interpreted correctly so AI systems make accurate and trustworthy decisions.

Continuous Refinement With Human-in-the-Loop Feedback

AI models improve through continuous learning. Human experts review predictions, correct mistakes, and guide the system over time. In medical imaging, for example, specialists compare the AI’s result with their own diagnosis, helping the model learn disease patterns more precisely. This human-in-the-loop approach strengthens AI systems and steadily increases their long-term performance.
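In practice, one pass of this review loop comes down to two things: overwrite the model’s labels with the expert’s corrections, and track how often corrections were needed. The sketch below illustrates that step under simple assumptions. The function `apply_corrections`, the scan IDs, and the labels are hypothetical.

```python
def apply_corrections(predictions, corrections):
    """Merge expert corrections into model predictions.

    predictions: dict of item_id -> model label
    corrections: dict of item_id -> expert label (only reviewed items)
    Returns (final_labels, correction_rate).
    """
    final_labels = dict(predictions)
    final_labels.update(corrections)  # the expert label always wins
    changed = sum(
        1 for item_id, label in corrections.items()
        if predictions.get(item_id) != label
    )
    correction_rate = changed / len(predictions) if predictions else 0.0
    return final_labels, correction_rate


# Hypothetical model output and one specialist correction.
model_predictions = {
    "scan_1": "benign",
    "scan_2": "benign",
    "scan_3": "malignant",
    "scan_4": "benign",
}
expert_corrections = {"scan_2": "malignant"}

final_labels, rate = apply_corrections(model_predictions, expert_corrections)
print(final_labels["scan_2"], rate)
```

The corrected labels can feed the next training round, and a correction rate that rises over time is an early hint that the model is drifting.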

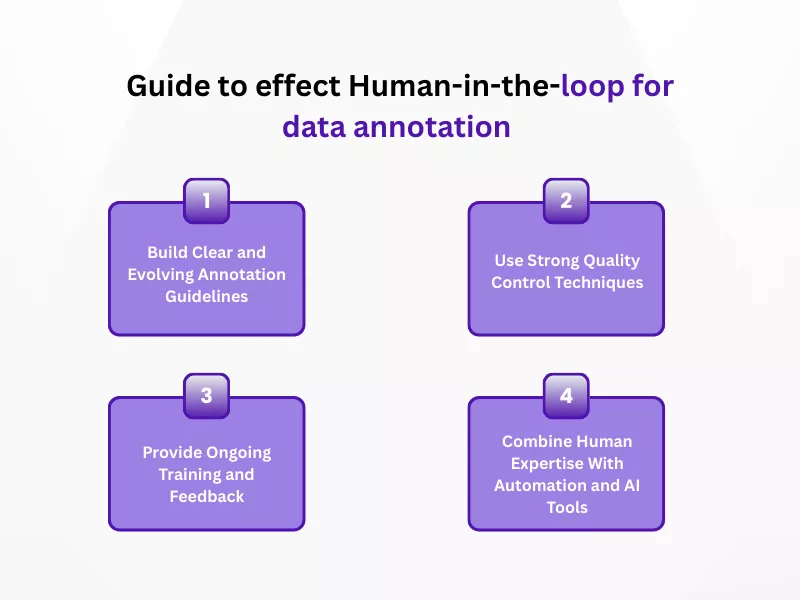

Guide to Effective Human-in-the-Loop Data Annotation

1. Build Clear and Evolving Annotation Guidelines

Strong annotation guidelines prevent confusion and ensure every annotator interprets data the same way. Treat these instructions as a living document and update them frequently based on annotator feedback, new use cases, and evolving gen-AI challenges, where unclear instructions quickly lead to inconsistent or biased outputs.

2. Use Strong Quality Control Techniques

High-quality annotations require structured checks at every stage. Practices like inter-annotator agreement, random audits, and golden datasets help teams spot errors early, measure consistency, and refine unclear areas. These quality controls ensure reliable, accurate data across large and complex projects.
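Two of these checks are easy to compute by hand. Inter-annotator agreement between two annotators is often reported as Cohen’s kappa, which corrects raw agreement for the agreement expected by chance, and a golden dataset check is simply accuracy against items with known answers. The sketch below shows both; the function names and sample labels are assumptions for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label
    return (observed - expected) / (1 - expected)


def gold_accuracy(annotator_labels, gold_labels):
    """Share of golden-set items the annotator labeled correctly."""
    shared = [item for item in gold_labels if item in annotator_labels]
    if not shared:
        raise ValueError("annotator has not labeled any golden items")
    correct = sum(annotator_labels[i] == gold_labels[i] for i in shared)
    return correct / len(shared)


# Hypothetical labels from two annotators on the same six items.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "pos"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))

# Hypothetical golden items mixed into an annotator's queue.
gold = {"item_7": "pos", "item_9": "neg"}
submitted = {"item_7": "pos", "item_8": "neg", "item_9": "pos"}
print(gold_accuracy(submitted, gold))
```

In this example the annotators agree on 4 of 6 items, but half of that agreement would be expected by chance, so kappa comes out at about 0.33, well below the raw 67% agreement.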

3. Provide Ongoing Training and Feedback

Human annotation works best when treated as a continuous learning loop. Regular training, refreshers, and practical examples help annotators stay aligned with guidelines and tools. Encouraging collaboration and knowledge sharing strengthens consistency and builds a more skilled and confident annotation team.

4. Combine Human Expertise with Automation and AI Tools

Automation can streamline repetitive annotation tasks, reduce workload, and speed up delivery. AI-powered suggestions, error detection, data prioritization, synthetic data generation, anonymization, and noise reduction all support annotators, allowing humans to focus on nuanced decisions while machines handle routine tasks efficiently.
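Data prioritization is a good example of this division of labor. One widely used approach is uncertainty sampling: rank items by how unsure the model is and send the most uncertain ones to annotators first. Below is a minimal sketch that uses prediction entropy as the uncertainty score. The function names, the record fields (`id`, `probs`), and the sample probabilities are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy of a probability distribution (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def prioritize_for_annotation(model_outputs, budget):
    """Return the ids of the `budget` items the model is least sure about."""
    ranked = sorted(
        model_outputs,
        key=lambda item: entropy(item["probs"]),
        reverse=True,  # highest entropy (most uncertain) first
    )
    return [item["id"] for item in ranked[:budget]]


# Hypothetical class probabilities from a pre-labeling model.
model_outputs = [
    {"id": "review_a", "probs": [0.98, 0.02]},
    {"id": "review_b", "probs": [0.55, 0.45]},
    {"id": "review_c", "probs": [0.70, 0.30]},
]

print(prioritize_for_annotation(model_outputs, budget=2))
```

Entropy only reflects the uncertainty the model reports, so it works best alongside random audits that can catch confident mistakes.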

Skills Required to Be an Effective Data Annotator

Delivering the high-quality labeled datasets that power accurate AI and machine learning models takes a mix of technical skills, domain expertise, and soft skills. Below are the essential skills required to be an effective data annotator:

Soft Skills Required for Data Annotation

- Attention to Detail and Accuracy

- Ability to Follow Guidelines

- Critical Thinking and Problem-Solving

- Time Management and Organization

- Communication Skills

- Focus, Patience, and Perseverance

- Adaptability and Continuous Learning

Technical Skills Needed for Data Annotators

- Basic Computer Literacy

- Familiarity with Annotation Tools

- Domain Expertise

- Basic Programming Knowledge

Why Choose Annotation Box?

We deliver best-in-class annotation services to train and develop machine learning and deep learning models through a human-in-the-loop approach. Here is why we can be the best solution for you:

- 500+ Employees

- 1000+ Trained Experts

- 95%+ Accuracy

- 50+ Happy Clients

- 450+ Successful Projects delivered on time

Conclusion

The future of AI is not full automation but human-AI collaboration, where skilled annotators play a critical, irreplaceable role in ensuring accuracy, safety, and performance. Organizations that choose skilled annotators or partner with reliable annotation companies will build stronger, more reliable, and more ethical AI systems.

Frequently Asked Questions

What is human annotation in machine learning?

Human annotation in machine learning means people manually label or tag data, such as text, images, audio, or video, so that AI models can learn from it. Humans add the correct meaning, context, and categories to the data, which helps the model understand patterns and make accurate predictions.

What are common challenges in human annotation?

Human annotation faces challenges like inconsistent labeling, subjective interpretations, fatigue from repetitive tasks, and varying domain expertise. Annotators may misunderstand guidelines, struggle with ambiguous data, or produce errors under time pressure. Ensuring quality, maintaining speed, and aligning multiple annotators’ judgments are ongoing issues. Scaling teams, training, and continuous quality checks also add complexity.

What is bias in human annotation?

Bias in human annotation occurs when personal beliefs, assumptions, or cultural backgrounds influence labeling decisions. This leads to skewed or unfair data that misrepresents real-world patterns. Bias can appear through stereotypes, selective attention, or misunderstanding of context. It impacts model fairness and accuracy, making it crucial to use clear guidelines, diverse annotators, and rigorous quality control.

Is data annotation a good career option for remote workers?

Yes. Data annotation is a strong remote career because most data labeling solutions allow flexible schedules and cloud-based work. As more companies develop AI-driven tools, from chatbots to robot assistants, the need for reliable annotation grows, making it a promising long-term option.

Do data annotators need certification to work on AI projects?

No certification is required. Most roles teach beginners how to label data to train AI systems and how to follow precision metrics. As skills grow, workers can move into expert data annotation roles where deeper interpretation and human input matter.

Which industries need the most data annotation today?

Industries with the highest demand include:

- Healthcare (medical images, diagnostics)

- Autonomous vehicles (lane markings, objects, traffic signals)

- Finance (risk modeling, document tagging)

- E-commerce and agriculture (product tagging, crop monitoring)

These sectors need high-quality labels to improve AI performance and ensure accurate search results.

How does poor annotation quality affect machine learning performance?

Poor labeling adds noise to the dataset, reducing accuracy across ML projects. It can confuse models during training, making them misread images, misjudge tone in sentiment analysis, or fail to respond correctly in chatbots. Strong annotation preserves data quality and improves every downstream metric.

What types of data require the most human involvement?

High-risk or specialized datasets rely heavily on humans, such as:

- Medical imagery

- Legal or financial documents

- Manufacturing defects

- Self-driving computer vision edge cases

- Multilingual text data

These require careful review to preserve accuracy and context.

Can AI fully replace human annotators in the future?

No. AI can automate simple tasks, but humans remain essential for edge cases, judgment-heavy decisions, and refining model outputs. The future is a hybrid model where AI handles speed and humans handle precision, insight, and continuous feedback.

How do companies ensure high-quality annotation at scale?

Companies use layered review systems, clear labeling guidelines, trained team members, and human-in-the-loop checks. This workflow scales efficiently while ensuring the data labeling solutions stay precise, consistent, and ready for production-grade AI systems.

- Human Annotation: 3 Edge Cases Automation Misses - December 4, 2025