The amount of user-generated content on social media has increased rapidly over the years. That created the need for a more rigorous content screening and moderation process. With millions of users posting on Facebook, X, Instagram, and other social media platforms every day, the need to automate content screening has increased manifold.

AI-powered content moderation, including content moderation services for children’s websites, has become increasingly relevant because it can sift through this massive amount of content and automatically decide what can be kept, what should be taken down, and what needs human review.

This blog will dive deep into AI powered content moderation to help you learn how it is changing social media and making the platforms safe for all users.

Social media moderation went through a lot of changes to reach its present form. The traditional system of identifying harmful content or inappropriate content involved human moderators only.

Manual review was time-consuming and costly, and human moderators struggled to keep up with the sheer volume of content on social media. An automated content moderation process that uses artificial intelligence, machine learning, and natural language processing (NLP) is faster and can manage large volumes of content more efficiently.

However, the need for human moderators has not died down. In fact, the modern content moderation systems rely on a hybrid approach. It combines both AI and human moderators to manage the growing amount of online content, AI-generated content, and potentially harmful or inappropriate content.

Simply put, the need to manage the growing amount of data led to the transition from traditional to modern content moderation using AI.

What Are the Different Types of AI Content Moderation?

The different types of content moderation are broadly divided into two categories: moderation by method and moderation by timing. Here’s a look at what falls under each of these categories:

| Moderation by Method | Moderation by Timing |
| --- | --- |
| Human moderation | Pre-moderation |
| Automated (AI) moderation | Post-moderation |
| Hybrid moderation | Reactive moderation |
| Distributed moderation | Proactive moderation |

Here’s a brief explanation of each of these types to help you understand them better:

A. Moderation by Method

The classification is focused on who or what is performing the moderation.

- Human Moderation

This is the traditional approach, where human content moderation teams review content. It is very effective for nuanced cases, such as sarcasm, satire, and cultural context. However, it does not scale well and can be stressful for human moderators who are required to review graphic or potentially harmful content.

- Automated (AI) Moderation

In this type, AI and machine learning systems are used to detect and flag inappropriate content automatically. AI tools have proved to be effective for handling massive volumes of content at speed and scale. AI moderation can help identify violations like spam, nudity, and hate speech using NLP for text and computer vision for images and videos.

- Hybrid Moderation

This is the most effective and common type of content moderation. It combines human and AI moderation techniques for better accuracy. AI scans large volumes of content and sends the items that need further review to human moderators before the content is published.

- Distributed Moderation

This type of moderation is also known as community-based moderation. It shares some review responsibilities with the users. The social media platforms allow users to flag inappropriate content, and then the report is reviewed by the central content moderation team or other trusted community members. While the method is scalable, it faces the risk of coordinated abuse or ‘pile-on’ reporting.
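One way to soften the pile-on risk in distributed moderation is to count each reporter only once and weight reports by how reliable the reporter has been. The sketch below is a minimal illustration under that assumption. The function names, the trust values, and the escalation threshold are all hypothetical and not taken from any specific platform.

```python
def weighted_report_score(reporter_ids, trust, default_trust=0.5):
    """Sum reporter trust for one piece of content, counting each reporter once.

    reporter_ids: ids of the accounts that reported the content (may repeat).
    trust: hypothetical mapping of reporter id -> trust weight.
    """
    counted = set()
    score = 0.0
    for reporter_id in reporter_ids:
        if reporter_id in counted:
            continue  # repeated reports from the same account add nothing
        counted.add(reporter_id)
        score += trust.get(reporter_id, default_trust)
    return score


def should_escalate(reporter_ids, trust, threshold=2.0):
    """Send the content to the central moderation team once the score is high enough."""
    return weighted_report_score(reporter_ids, trust) >= threshold


# Hypothetical trust weights: a trusted community member and two new accounts.
trust = {"mod_alice": 1.5, "new_1": 0.2, "new_2": 0.2}
print(should_escalate(["new_1", "new_1", "new_2"], trust))
print(should_escalate(["mod_alice", "unknown_user"], trust))
```

Weighting does not eliminate coordinated abuse, but it means a burst of reports from low-trust accounts is less likely to trigger action on its own.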

B. Moderation by Timing

The classification is focused on when the moderation takes place with respect to the publication of the content.

- Pre-Moderation

The content is reviewed and approved by a moderator before it is published. The process provides the highest level of control. It is mostly used for platforms for children, e-commerce review sites, or forums that prioritize safety over speed. The disadvantage here is that it takes a lot of time to publish content on the site.

- Post-Moderation

The moderation happens after the content goes live on social media platforms. This helps in real-time engagement and is very common for social media platforms. The challenge is that harmful content will be reviewed after it is published on the platform. The aim is to remove such pieces of content as fast as possible.

- Reactive Moderation

This type of moderation relies entirely on user reports. Content is reviewed only after a user flags it for a potential violation. The method needs few resources, but it is slow: harmful content stays visible until someone reports it.

- Proactive Moderation

Proactive moderation is a highly advanced method that is often performed with the use of AI. In this method, the content is scanned automatically and removed before it gets published or reported. AI can identify and block a video if it falls under the platform’s prohibited content policies.
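The difference between pre-moderation and post-moderation comes down to whether content becomes visible before or after review. The following sketch shows that difference with two plain lists standing in for a review queue and a live feed. The names `Timing`, `handle_submission`, and `approve` are illustrative assumptions, not a real platform API.

```python
from enum import Enum


class Timing(Enum):
    PRE = "pre-moderation"
    POST = "post-moderation"


def handle_submission(timing, review_queue, live_feed, item):
    """Place a new item according to the timing policy; return True if it is live."""
    if timing is Timing.POST:
        live_feed.append(item)  # visible immediately, reviewed afterwards
    review_queue.append(item)   # every item is reviewed in both modes
    return item in live_feed


def approve(review_queue, live_feed, item):
    """Mark an item as reviewed and make sure it is visible."""
    review_queue.remove(item)
    if item not in live_feed:
        live_feed.append(item)  # pre-moderated items go live only now


queue, feed = [], []
print(handle_submission(Timing.PRE, queue, feed, "comment-1"))
approve(queue, feed, "comment-1")
print(feed)
```

Under pre-moderation the item waits in the queue, which is the publishing delay described above. Under post-moderation it is live at once, so the review has to happen quickly.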

Companies often avail content moderation services to keep things on track and keep their pages free from inappropriate or harmful content.

How Does AI-Powered Content Moderation Work?

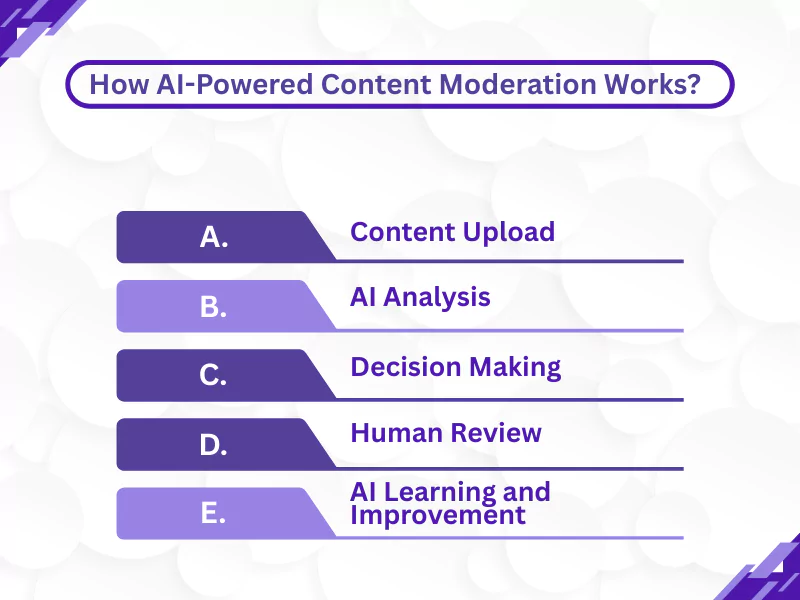

AI-powered content moderation generally follows five steps, although the details vary depending on the type of moderation. The general steps are as follows:

A. Content Upload

The process starts when a user uploads the content to a particular platform. The content can be of any form, such as social media posts, photos, user-generated videos, reviews, or comments.

B. AI Analysis

The AI systems then analyze the uploaded content using various techniques, such as:

- Machine learning (ML): Machine learning models are trained to identify patterns in the content associated with violations of platform rules.

- NLP: It is used to analyze text to understand context and sentiment and to identify issues like hate speech or spam.

- Computer vision: This is used to analyze images and videos. It can identify inappropriate visuals, objects, or activities.

C. Decision Making

The AI models determine if the content is compliant or needs further action after the analysis.
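In practice, this decision is often expressed as thresholds on a score produced by the model. The sketch below assumes the analysis step yields a single violation score between 0 and 1. The threshold values and the three outcome labels are hypothetical and would be tuned per platform and per policy.

```python
def decide(violation_score, remove_at=0.9, review_at=0.5):
    """Map a model's violation score to an action.

    The thresholds are illustrative assumptions, not recommended values.
    """
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= remove_at:
        return "remove"        # the model is confident the content violates policy
    if violation_score >= review_at:
        return "human_review"  # uncertain cases go to a moderator
    return "approve"           # treated as compliant


for score in (0.05, 0.62, 0.97):
    print(score, decide(score))
```

Widening the gap between the two thresholds sends more content to human moderators, which trades speed for accuracy.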

D. Human Review

Human review is necessary when the AI flags content as potentially harmful or inappropriate. The moderators check whether the flag raised by the AI tools is justified, consider the context, and make the final content moderation decision.

E. AI Learning and Improvement

The feedback provided by human moderators helps the AI models learn and improve themselves. It helps refine AI algorithms for a better understanding of problematic content. The process helps improve their accuracy over time.
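Real systems usually use moderator feedback to retrain the model itself. As a much simpler stand-in for that loop, the sketch below uses human verdicts to recalibrate the flagging threshold: it picks the candidate threshold that agrees most often with the moderators. The function names, the candidate thresholds, and the sample data are assumptions made for illustration.

```python
def agreement(threshold, reviewed):
    """Share of reviewed items where 'score >= threshold' matches the human verdict.

    reviewed: list of (model_score, human_says_violation) pairs.
    """
    if not reviewed:
        raise ValueError("need at least one reviewed item")
    hits = sum((score >= threshold) == is_violation for score, is_violation in reviewed)
    return hits / len(reviewed)


def recalibrate(reviewed, candidates=(0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9)):
    """Return the candidate threshold that best matches moderator decisions."""
    return max(candidates, key=lambda t: agreement(t, reviewed))


# Hypothetical feedback: moderators overturned two flags with mid-range scores.
feedback = [(0.55, False), (0.65, False), (0.75, True), (0.95, True)]
print(agreement(0.5, feedback))
print(recalibrate(feedback))
```

With this sample, a threshold of 0.5 agrees with moderators only half the time, so the recalibration moves it up to the value that matches all four verdicts.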

The entire process depends on how well the AI models are trained. Businesses often avail data annotation services to get the right training data for building accurate AI and ML models.

What Are the Benefits of AI Content Moderation?

AI content moderation offers a lot of benefits. The following are the key benefits of using AI in content moderation:

A. Increased Scalability

AI can scan and process a massive volume of user-generated content and flag the content that violates community guidelines. This is one of the major benefits of using AI for content moderation at a scale where manual moderation is not practical.

B. Increased Speed

AI can identify and act on violations as soon as users post content. It helps in flagging and removing harmful or inappropriate content quickly. Consequently, it helps protect users and the platform’s reputation.

C. Cost-Effective

Automating content moderation using moderation tools reduces the need for a large human moderation team. It helps save a lot of operational costs.

D. Improved Accuracy and Consistency

AI applies the same content moderation policies to every piece of content, which supports uniform enforcement and reduces human error. This leads to fairer and more consistent outcomes on social media platforms.

E. Improved User Experience

Generative AI models can help in the quick removal of harmful content, like hate speech, spam, and graphic violence, thus creating a safer and more positive environment for users. Consequently, it enhances user satisfaction and retention.

F. Reduced Human Moderator Exposure

AI reduces human exposure to potentially disturbing and harmful content. Given the high volume of user-generated content, reviewing such material can be stressful and affect moderators’ mental health. AI content moderation lets human moderators focus on more complex issues.

G. Multi-Lingual Support

AI-powered content moderation tools can be trained to understand and moderate content in multiple languages. This helps maximize the reach and safety of global platforms.

H. Actionable Insights

Companies use AI to moderate content in order to get actionable insights into user behavior and content trends. The tools use metrics like toxicity scores, sentiment analysis, and spam scores to help platforms understand and improve their online environment.
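As a rough illustration of how such metrics turn into insights, the sketch below aggregates toxicity scores per content category. The record format, the category names, and the 0.8 cutoff for counting an item as toxic are assumptions, not values from any particular moderation tool.

```python
from collections import defaultdict
from statistics import mean


def summarize(records, toxic_at=0.8):
    """Aggregate toxicity scores per category.

    records: iterable of (category, toxicity_score) pairs.
    toxic_at: hypothetical cutoff above which an item counts as toxic.
    """
    by_category = defaultdict(list)
    for category, score in records:
        by_category[category].append(score)
    return {
        category: {
            "count": len(scores),
            "mean_toxicity": mean(scores),
            "share_toxic": sum(s >= toxic_at for s in scores) / len(scores),
        }
        for category, scores in by_category.items()
    }


records = [("comments", 0.9), ("comments", 0.1), ("reviews", 0.2)]
for category, stats in summarize(records).items():
    print(category, stats)
```

Tracking these numbers over time can show, for example, whether a policy change reduced the share of toxic comments.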

Automated moderation systems have made it easy for different platforms to assess and analyze their online environment. The systems can perform video moderation as well as understand text-based content and share insights to keep the platform clean from all harmful elements.

What Are the Challenges of Content Moderation Systems?

With content creation at an all-time high, using AI tools to remove inappropriate content has become essential. Such tools are undeniably beneficial. However, implementing them comes with a few challenges, such as:

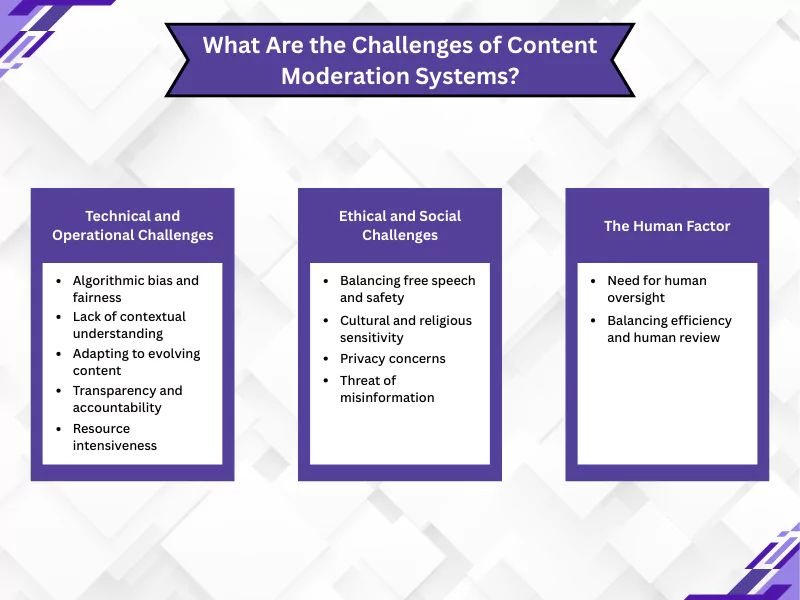

A. Technical and Operational Challenges

- Algorithmic bias and fairness – AI models used for social media content may inherit and amplify biases present in their training data. This can lead to unfair or discriminatory moderation decisions.

- Lack of contextual understanding – AI often struggles with nuance, sarcasm, humor, and coded language. This leads to misreading user intent, flagging appropriate content as harmful, and often missing content that is actually harmful.

- Adapting to evolving content – The rapid evolution of online content and conversation, which includes new trends, cultural references, and content that AI creates, makes it difficult for content filtering tools to keep up.

- Transparency and accountability – Machine learning moderation is complex, which makes it difficult for users to understand why their content was moderated. It also limits their ability to challenge the decisions.

- Resource intensiveness – Developing and maintaining AI models for content moderation requires significant data, resources, and expertise, which makes it difficult for smaller organizations.
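The algorithmic bias concern in the list above can be checked with a simple audit: compare how often benign content is wrongly flagged for different groups of users. The sketch below computes a false positive rate per group. The group labels and the record format are hypothetical, and a real audit would need far more data and care in defining groups.

```python
from collections import Counter


def false_positive_rates(decisions):
    """Share of benign content that the AI flagged, per group.

    decisions: iterable of (group, flagged_by_ai, actually_violating) tuples.
    """
    benign = Counter()
    wrongly_flagged = Counter()
    for group, flagged, violating in decisions:
        if violating:
            continue  # only benign content can be a false positive
        benign[group] += 1
        if flagged:
            wrongly_flagged[group] += 1
    return {group: wrongly_flagged[group] / total for group, total in benign.items()}


# Hypothetical audit sample with human-verified labels.
audit = [
    ("dialect_a", True, False),
    ("dialect_a", False, False),
    ("dialect_b", False, False),
    ("dialect_b", False, False),
    ("dialect_b", True, True),
]
print(false_positive_rates(audit))
```

A large gap between groups suggests the model treats similar content differently depending on who wrote it, which is a signal to revisit the training data.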

B. Ethical and Social Challenges of Harmful Content

- Balancing free speech and safety – One of the major challenges of AI content moderation is to effectively filter out harmful content without harming legitimate expression. Since the algorithms can potentially flag controversial but important viewpoints, it becomes a challenge to moderate content.

- Cultural and religious sensitivity – AI systems are often not trained to recognize cultural nuances, so content may be misjudged when its cultural context is missed.

- Privacy concerns – Ethical issues in AI moderation also include concerns about user privacy. Because the models scan large volumes of user data, strong data protection is needed.

- Threat of misinformation – AI systems can be exploited to create and spread misinformation, which makes AI-generated misinformation and deepfakes a continuous battle.

C. The Human Factor

- Need for human oversight – While the power of AI in content moderation cannot be denied, it cannot handle all cases. Human oversight remains essential for complex or nuanced decisions, but reviewing the hardest cases can contribute to moderator burnout and mental health impacts.

- Balancing efficiency and human review – Hybrid moderation combines AI and human moderators for reviewing content. However, finding the right balance between AI and human judgment is difficult.

It is necessary to weigh both the pros and cons before implementing AI moderation tools.

The Future of AI-Powered Content Moderation

Content moderation is an essential process considering the increasing amount of user-generated content on social media. While it has both advantages and disadvantages, the future holds a lot of possibilities. Discover how AI content moderation will be more scalable and consistent in the near future:

A. More Sophisticated Algorithms

The future advancements of machine learning and natural language processing will lead to AI models that will be more accurate and will be able to understand the nuances.

B. Contextual Understanding

Future models will be better able to grasp the context of content. This will reduce misinterpretations and help classify diverse content more accurately.

C. Continuous Learning

Integrating reinforcement learning and user feedback will help AI systems learn continuously from their decisions and adapt to the evolving online behaviors and new types of content.

D. Multimodal AI

Integrating multimodal AI will help in simultaneous text, image, and video analysis, thus helping in more comprehensive content verification.
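A fully multimodal model analyzes text, images, and video jointly. A much simpler approximation, shown below, scores each modality separately and then combines the results by taking the highest score, so that a violation in any one modality is enough to raise the overall score. The modality names and the max rule are assumptions chosen for illustration.

```python
def combined_score(scores):
    """Combine per-modality violation scores by taking the highest one.

    scores: mapping of modality name -> violation score in [0, 1].
    Returns (modality_with_highest_score, highest_score).
    """
    if not scores:
        raise ValueError("at least one modality score is required")
    worst = max(scores, key=scores.get)
    return worst, scores[worst]


# Hypothetical post: harmless caption, problematic image, mostly fine video.
post_scores = {"text": 0.1, "image": 0.85, "video": 0.3}
print(combined_score(post_scores))
```

Returning the modality alongside the score also tells a human reviewer where to look first.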

AI can analyze different types of content, and soon it will help in more accurate and consistent content moderation.

Conclusion

Moderating content on social media is crucial to keep the platform safe and secure. AI tools have proved beneficial for assessing and analyzing huge volumes of content. While AI can scan a massive amount of content, human moderators are still important, because flagged content needs human review before a final decision is made.

The technology is scalable and will soon be able to deliver more consistent and accurate results. Businesses are already implementing AI tools to moderate content to ensure they abide by the platform and community guidelines.

Frequently Asked Questions

How does AI help with moderation issues on social media?

AI can help with quick filtering, detection, and review of massive volumes of user-generated content. The models use natural language processing and computer vision to identify harmful content, thus reducing human workload and speeding up moderation.

How has content moderation become challenging with social media growth?

Content moderation has become more challenging with social media growth because of the increased chances of misinformation, harmful speech, or explicit visuals in user-generated content. Moderation has become difficult because an enormous number of posts are published on social media every day. As a consequence, ensuring community safety and authenticity of content has become challenging.

How does content moderation help scale your content operations?

Content moderation helps in scaling your content operations by automating the review process. AI models are able to process vast amounts of data at once, send risky content for review, and maintain safe online environments.

What should users do if they want to report content for violations?

Users can report content for violations directly on the social media platforms. The content is flagged for review as soon as it is reported. AI systems either assess the content instantly or flag it for human moderators.

Can AI moderation resources completely replace human moderators?

No, AI models cannot replace human moderators. While these models can detect patterns and help in scaling moderation, human moderators are important for nuanced cases. AI often struggles with context, sarcasm, or cultural references.

How does AI-powered content moderation become more effective over time?

AI moderation will become more effective as it starts learning from human feedback, adapts to evolving online trends, and improves contextual understanding. Continuous model training enhances accuracy and helps reduce errors.

- How AI Powered Content Moderation Is Changing Social Media? - September 26, 2025