Podcasts have grown steadily in popularity. With over two million active podcasts and many hours of digital media produced every day, demand for accurate, accessible content has risen significantly. Automated transcription has been used for years to make audio searchable and accessible to everyone.

But a standard transcript captures only the surface: the words. Plain speech-to-text output misses the context carried in the audio, such as who is speaking, what is happening in the background, and how things are said. This gap limits the content’s accessibility, discoverability, and potential for repurposing.

This is why transcription with AI-based audio annotation has become essential. It goes beyond simple transcription by labeling who is speaking, what is happening, and how things are said, turning a plain text file into a multi-layered, structured data asset.

So, how does the technology add intelligence to a simple text file? It happens in layers; let’s explore.

AI-based audio annotation is the process of using artificial intelligence to label and categorize audio data. It involves applying metadata, tags, or other descriptive information to audio recordings. This data helps train AI and machine learning models for tasks such as speech recognition, audio analysis, and sound event detection. The objective is to help AI models interpret audio, including long recordings such as full podcast episodes.

Beyond podcasts, annotated audio also powers voice assistants, in-car command systems, call center analytics, and much more.

To see how this applies to podcast transcription, it helps to understand the individual layers of annotation.

The Layers of Annotated Audio Data: Adding Color to Transcriptions with AI-Based Audio Annotation

As discussed earlier, AI-based audio annotation adds layers of context to media transcriptions, converting plain text into a rich, detailed map of the recording. The following components show why speech-to-text transcription benefits from AI-based audio annotation:

A. Speaker Diarization: Solving the ‘Who Said That?’ Puzzle

Speaker diarization identifies and labels who is speaking at any point in time. Instead of producing a single undifferentiated block of text, it attributes each utterance in a conversation to a speaker.

This makes interviews, multi-host podcasts, and panel discussions much easier to read and follow.

Here’s an example:

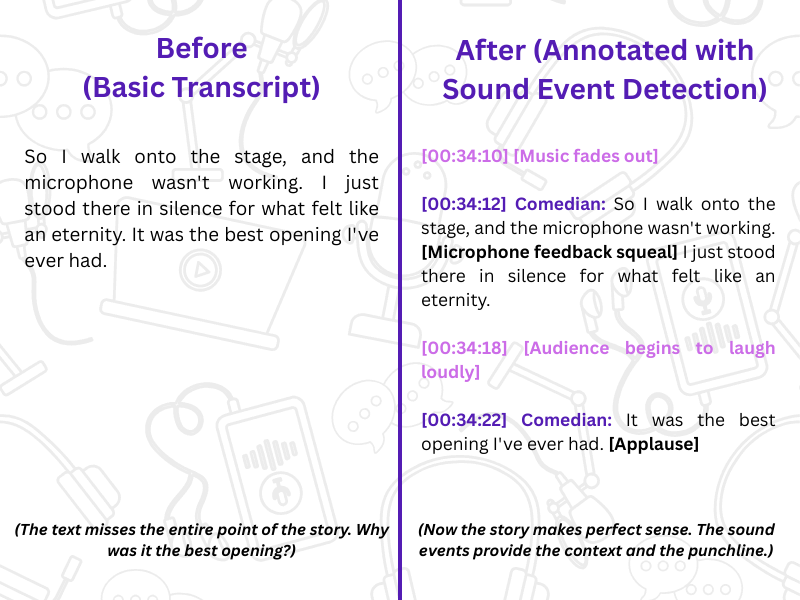
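To make the idea concrete, here is a minimal Python sketch of what can happen after a diarization model has tagged each segment with a speaker label. It assumes the model's output is a list of timed segments with generic labels such as `SPEAKER_1` (a hypothetical format), merges back-to-back segments from the same speaker into one turn, and maps the labels to human-readable names. The one-second `max_gap` is an illustrative assumption, not a standard value.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    speaker: str  # label assigned by the diarization step (hypothetical format)
    start: float  # seconds from the start of the episode
    end: float
    text: str

def merge_turns(segments, max_gap=1.0):
    """Merge consecutive segments from the same speaker into one turn
    when the pause between them is at most max_gap seconds."""
    turns = []
    for seg in sorted(segments, key=lambda s: s.start):
        last = turns[-1] if turns else None
        if last and last.speaker == seg.speaker and seg.start - last.end <= max_gap:
            turns[-1] = Segment(last.speaker, last.start, seg.end, f"{last.text} {seg.text}")
        else:
            turns.append(seg)
    return turns

def format_timestamp(seconds):
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"

def render(turns, names=None):
    """Render speaker-labeled lines; `names` maps diarization labels to display names."""
    names = names or {}
    return "\n".join(
        f"[{format_timestamp(t.start)}] {names.get(t.speaker, t.speaker)}: {t.text}"
        for t in turns
    )

segments = [
    Segment("SPEAKER_1", 0.0, 2.5, "Welcome back to the show."),
    Segment("SPEAKER_1", 2.8, 4.0, "Today we have a guest."),
    Segment("SPEAKER_2", 4.3, 6.0, "Thanks for having me."),
]
print(render(merge_turns(segments), {"SPEAKER_1": "Host", "SPEAKER_2": "Guest"}))
# [00:00] Host: Welcome back to the show. Today we have a guest.
# [00:04] Guest: Thanks for having me.
```

Keeping the raw labels separate from the display names lets an editor rename speakers once without touching the segment data.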

B. Sound Event Detection: Capturing the Full Auditory Scene

Sound event detection identifies and tags important non-speech sounds, such as music, applause, or laughter, that provide context, emotion, or narrative cues.

These sounds are a crucial part of storytelling and accessibility. Tagging them conveys the full scene to audience members who are deaf or hard of hearing, and it helps content creators pinpoint the most engaging moments in an episode.

The following example will help you understand the automation process and workflow better:

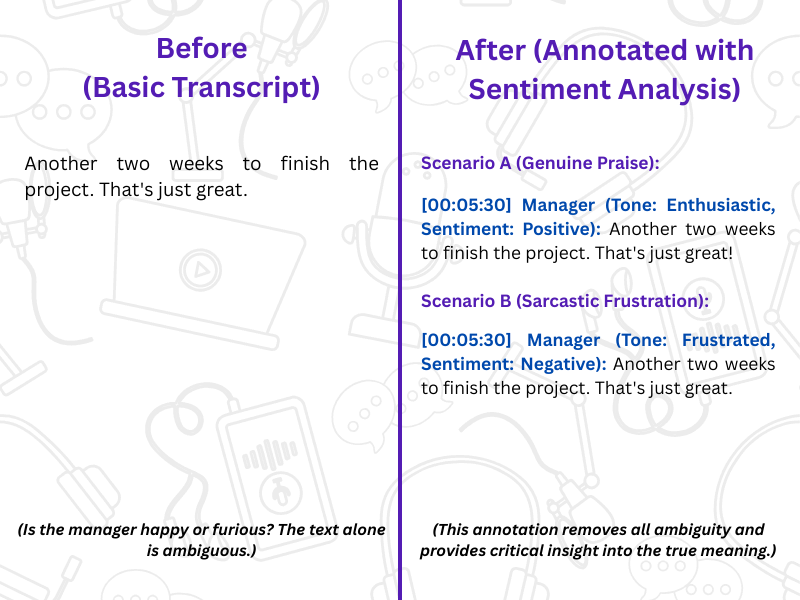
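Once a sound event detector has produced timestamped labels, those labels still have to be woven into the transcript. The sketch below assumes hypothetical tuples of `(start_sec, label, confidence)` for events and `(start_sec, speaker, text)` for speech. It drops detections below an assumed confidence threshold of 0.5 and sorts everything by time so captions such as `[LAUGHTER]` appear where the sound occurs.

```python
def build_captions(speech, events, min_confidence=0.5):
    """Interleave transcript lines with detected non-speech sounds.

    speech: list of (start_sec, speaker, text)
    events: list of (start_sec, label, confidence) from a sound event detector
    """
    items = [(start, 0, f"{speaker}: {text}") for start, speaker, text in speech]
    items += [
        (start, 1, f"[{label.upper()}]")
        for start, label, confidence in events
        if confidence >= min_confidence  # drop low-confidence detections
    ]
    items.sort(key=lambda item: (item[0], item[1]))  # by time; speech first on ties
    return [line for _, _, line in items]

speech = [(0.0, "Host", "So what happened next?"), (5.2, "Guest", "Honestly, I froze.")]
events = [(3.1, "laughter", 0.92), (7.0, "music", 0.30), (9.0, "applause", 0.81)]
for line in build_captions(speech, events):
    print(line)
# Host: So what happened next?
# [LAUGHTER]
# Guest: Honestly, I froze.
# [APPLAUSE]
```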

C. Sentiment and Emotion Analysis: Understanding How It Was Said

A plain transcript fails to capture the underlying sentiment or emotion. This conversational audio annotation technique analyzes a speaker’s vocal tone, pitch, and pace to infer sentiment and emotion.

This matters because it helps content creators gauge audience reactions and respond to feedback accordingly.

The following example will help you gain a proper understanding of the need for high-quality annotated audio data:

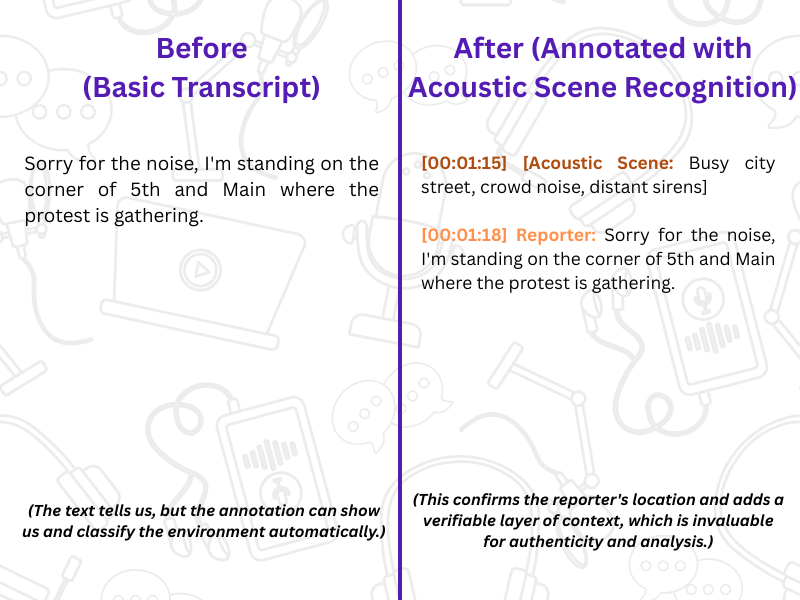
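Tone, pitch, and pace have to be turned into numbers before a sentiment model can use them. The sketch below computes two such features: a rough pitch estimate using time-domain autocorrelation, and speaking pace in words per minute. It does not classify emotion itself, and the 75 to 400 Hz search range is an assumed range for typical speaking voices. Plain autocorrelation is prone to octave errors on real speech, so production systems generally rely on more robust pitch trackers.

```python
import math

def estimate_pitch_hz(samples, sample_rate, fmin=75.0, fmax=400.0):
    """Rough fundamental-frequency estimate: the lag (in samples) with the
    strongest self-similarity is taken as one pitch period."""
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove DC offset
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), n - 1)
    best_lag, best_corr = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        corr = sum(x[i] * x[i + lag] for i in range(n - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag if best_lag else None  # None for silence

def words_per_minute(text, start, end):
    """Speaking pace for one transcript segment."""
    return len(text.split()) / (end - start) * 60.0

sample_rate = 8000
tone = [math.sin(2 * math.pi * 200 * t / sample_rate) for t in range(800)]  # 0.1 s at 200 Hz
print(round(estimate_pitch_hz(tone, sample_rate)))  # 200
print(words_per_minute("we did not see that coming", 10.0, 13.0))  # 120.0
```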

D. Acoustic Scene Recognition: Identifying the Recording Environment

Acoustic scene recognition identifies the environment in which a recording was made, such as a studio, a busy street, or a café.

This is especially useful for journalistic reports, documentary-style podcasts, and on-the-go recordings. It explains the background noise listeners hear, adds a layer of authenticity to real-world audio segments, and supports better human-computer interaction.

Read the example for a better understanding of automation:
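Scene classifiers typically emit one label per short frame, and those predictions can flicker. As an illustrative sketch, assuming one hypothetical label per second of audio, a majority-vote filter smooths out one-frame glitches before consecutive frames are collapsed into labeled time ranges. The three-frame window is an assumption chosen for the example.

```python
from collections import Counter

def smooth_labels(labels, window=3):
    """Majority-vote filter over per-frame scene predictions to remove
    one-frame flickers (ties go to the label seen first in the window)."""
    half = window // 2
    return [
        Counter(labels[max(0, i - half): i + half + 1]).most_common(1)[0][0]
        for i in range(len(labels))
    ]

def to_segments(labels, frame_sec=1.0):
    """Collapse runs of identical labels into (start_sec, end_sec, label)."""
    segments = []
    for i, label in enumerate(labels):
        if segments and segments[-1][2] == label:
            start = segments[-1][0]
            segments[-1] = (start, (i + 1) * frame_sec, label)
        else:
            segments.append((i * frame_sec, (i + 1) * frame_sec, label))
    return segments

frames = ["street", "street", "cafe", "street", "street", "studio", "studio", "studio"]
print(to_segments(smooth_labels(frames)))
# [(0.0, 5.0, 'street'), (5.0, 8.0, 'studio')]
```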

The Best Uses of Audio Data Annotation for Speech Recognition

These layers show how AI audio annotation makes transcripts richer and easier for audiences to understand. The following benefits illustrate why annotation matters for podcast transcription:

A. For Content Creators and Marketers

Annotated transcripts are powerful instruments for content strategy and promotion.

➤ Easy Content Repurposing – Content creators and marketers can use speaker diarization to pull a specific speaker’s quotes from a podcast for a blog post. They can also quickly find the most engaging sections and reuse them in promotions or social media videos.

➤ Better SEO – Structured data can help search engines index not just keywords but also who said them. This helps audiences find answers to long-tail queries that a basic transcript cannot satisfy.

➤ Deeper Audience Insights – Careful speech annotation enables accurate sentiment analysis, which offers a roadmap to audience engagement and helps you plan future content.

B. For Audience and User Experience (UX)

A well-annotated transcript increases engagement and turns a passive listening experience into an active and interactive one.

➤ Radical Accessibility – This is one of the major benefits of AI-based audio annotation. For the deaf and hard-of-hearing community, an annotated transcript adds much-needed descriptions of sound events alongside the dialogue.

➤ Improved Search and Navigation – Proper audio data annotation makes the transcript easy to navigate. Listeners can click a speaker’s name to jump to the point where that person speaks instead of scrubbing through the entire episode.
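One simple way to support this kind of click-to-jump navigation is to look up the next timestamp at which a given speaker starts talking. This minimal sketch assumes diarized turns are available as hypothetical `(start_sec, speaker)` pairs and uses binary search from Python's standard `bisect` module to find the first turn that starts after the current playback position.

```python
from bisect import bisect_right

def speaker_turn_starts(turns, speaker):
    """Sorted start times (seconds) of every turn by one speaker."""
    return sorted(start for start, who in turns if who == speaker)

def next_turn(turns, speaker, position):
    """Start of the speaker's next turn strictly after the playback
    position, or None if they do not speak again."""
    starts = speaker_turn_starts(turns, speaker)
    i = bisect_right(starts, position)
    return starts[i] if i < len(starts) else None

turns = [(0.0, "Host"), (12.5, "Guest"), (40.0, "Host"), (55.2, "Guest")]
print(next_turn(turns, "Guest", 20.0))  # 55.2
print(next_turn(turns, "Guest", 60.0))  # None
```

In a real player, the per-speaker start lists would be built once per episode rather than on every click.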

C. For Businesses and Researchers

Annotated data supports analysis that helps businesses and researchers make better-informed decisions.

➤ Qualitative Data Analysis at Scale – Speaker diarization and sentiment analysis help managers make sense of large volumes of comments, distinguish positive feedback from negative, and respond accordingly.

➤ Compliance and Monitoring – AI systems can flag keywords or phrases for compliance review. Sentiment analysis can even flag calls in which customers sound distressed, enabling proactive action.
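Keyword flagging for compliance review can be sketched with regular expressions over timestamped transcript segments. The watch list, the `(start_sec, text)` segment format, and the function name below are hypothetical. Matching is whole-word and case-insensitive, so a watched term like "refund" does not fire inside "refunded"; whether that trade-off suits a given review policy is a judgment call.

```python
import re

def flag_phrases(segments, phrases):
    """Return (start_sec, phrase, text) for each segment containing a watched
    phrase, matched case-insensitively on whole words."""
    patterns = {
        p: re.compile(r"\b" + re.escape(p) + r"\b", re.IGNORECASE) for p in phrases
    }
    hits = []
    for start, text in segments:
        for phrase, pattern in patterns.items():
            if pattern.search(text):
                hits.append((start, phrase, text))
    return hits

segments = [
    (3.0, "I want a refund right now."),
    (9.5, "It was refunded yesterday."),
    (14.0, "Please CANCEL my account."),
]
for hit in flag_phrases(segments, ["refund", "cancel my account"]):
    print(hit)
```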

Together, these benefits make AI-based audio annotation valuable for automating media transcription, especially for podcasts.

Best Practices for Transcriptions with AI-Based Audio Annotation

Companies that offer audio transcription services, such as AnnotationBox, follow established best practices to ensure transcription with AI-based audio annotation is done correctly. Here’s a look at some of them:

A. Data Preparation and Input

For data preparation and input, the companies follow these practices:

➤ High-quality audio – The companies ensure the audio is clean, free of noise, and standardized in volume and format, which leads to more accurate AI models.

➤ Contextual data – They collect diverse audio recordings from multiple sources that are relevant to the AI model’s intended purpose.

➤ Data cleansing – The process involves filtering out irrelevant information and standardizing data formats to maintain quality standards.
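Standardizing volume can be as simple as scaling each clip to a common RMS level. The sketch below assumes floating-point samples in the range -1.0 to 1.0 and an illustrative target of -20 dBFS. It is a simplification: podcast and broadcast loudness is usually measured in LUFS as defined in ITU-R BS.1770, which adds frequency weighting and gating that this code omits.

```python
import math

def rms_dbfs(samples):
    """RMS level in dB relative to full scale (samples in [-1.0, 1.0])."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")

def normalize_rms(samples, target_dbfs=-20.0):
    """Scale a clip so its RMS level matches target_dbfs, clipping to [-1, 1]."""
    current = rms_dbfs(samples)
    if current == float("-inf"):
        return list(samples)  # silence: nothing to scale
    gain = 10 ** ((target_dbfs - current) / 20)
    return [max(-1.0, min(1.0, s * gain)) for s in samples]

# One second of a quiet 440 Hz tone at 16 kHz.
quiet = [0.01 * math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
print(round(rms_dbfs(quiet), 1))                 # -43.0
print(round(rms_dbfs(normalize_rms(quiet)), 1))  # -20.0
```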

B. Annotation Process

The annotation process involves:

➤ Clear guidelines – Developing detailed annotation guidelines that define each label precisely and include example annotations for consistency.

➤ Annotation technique – Selecting the right AI audio annotation techniques for transcription accuracy.

➤ Hybrid approach – Combining both AI tools and human review to ensure fast and accurate annotations.

➤ Contextual models – Using domain-specific language models to improve recognition of industry-specific terms.
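Guidelines are easiest to enforce when their rules are also expressed as automated checks. The sketch below encodes three hypothetical guideline rules (an allowed label set, valid time ranges, and a speaker on every speech segment) and reports every violation. The label set and dictionary field names are assumptions for illustration, not a standard schema.

```python
ALLOWED_LABELS = {"speech", "music", "laughter", "applause", "noise"}  # hypothetical label set

def validate(annotations, duration):
    """Check annotations against basic guideline rules; return error messages."""
    errors = []
    for i, ann in enumerate(annotations):
        if ann["label"] not in ALLOWED_LABELS:
            errors.append(f"#{i}: unknown label {ann['label']!r}")
        if not 0 <= ann["start"] < ann["end"] <= duration:
            errors.append(f"#{i}: bad time range {ann['start']}-{ann['end']}")
        if ann["label"] == "speech" and not ann.get("speaker"):
            errors.append(f"#{i}: speech segment missing speaker")
    return errors

annotations = [
    {"label": "speech", "start": 0.0, "end": 4.2, "speaker": "Host"},
    {"label": "cheering", "start": 4.2, "end": 5.0},
    {"label": "speech", "start": 6.0, "end": 5.5},
]
for error in validate(annotations, duration=60.0):
    print(error)
# #1: unknown label 'cheering'
# #2: bad time range 6.0-5.5
# #2: speech segment missing speaker
```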

C. Quality Assurance and Iteration

Quality assurance and iteration involve the following steps:

➤ Multi-stage QA – Implementing a robust QA process that includes peer reviews, expert validation, and random spot-checks.

➤ Annotator training and feedback – Training annotators continuously and refining guidelines based on model performance and feedback.

➤ Iterative refinement – Using an iterative approach for reviewing and improving annotations and processes over time.
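Peer review is often quantified with an inter-annotator agreement score. The article does not name a metric; Cohen's kappa, shown below, is one common choice that corrects the raw agreement rate between two annotators for the agreement expected by chance. The helper for drawing a reproducible random spot-check sample uses an assumed 5% sampling rate and a fixed seed.

```python
import random
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators labeling the same items."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Probability both annotators pick the same label if each labeled at random
    # according to their own label frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # degenerate case: both used one identical label throughout
    return (observed - expected) / (1 - expected)

def spot_check_sample(item_ids, rate=0.05, seed=42):
    """Reproducible random sample of items for manual spot-checking."""
    k = max(1, round(len(item_ids) * rate))
    return random.Random(seed).sample(item_ids, k)

a = ["pos", "pos", "neg", "neu", "pos", "neg"]
b = ["pos", "neg", "neg", "neu", "pos", "neg"]
print(round(cohens_kappa(a, b), 3))  # 0.739
print(spot_check_sample(list(range(200))))
```

A kappa well below the team's usual level is a signal to revisit the guidelines or retrain annotators, which ties back to the feedback loop above.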

D. Data Management and Finalization

The data management and finalization step involves:

➤ Standardized format – Converting final annotations to a structured, machine-readable format like JSON or XML, including sitemaps.

➤ Model training – Integrating the high-quality annotated data with the rest of the training dataset for AI models.

➤ Privacy and compliance – Anonymizing data and adhering to all relevant privacy regulations.
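Finalization often combines these steps: redacting personal details and serializing the result in a machine-readable format such as JSON. In this sketch, the record layout (`episode_id`, `segments`) is hypothetical, and redaction only replaces names supplied in a list. Real anonymization usually needs named-entity recognition plus human review, because names and other identifiers rarely arrive in a ready-made list.

```python
import json
import re

def anonymize(text, names):
    """Replace each listed personal name with a numbered placeholder
    (whole words, case-insensitive)."""
    for i, name in enumerate(names, start=1):
        pattern = r"\b" + re.escape(name) + r"\b"
        text = re.sub(pattern, f"[PERSON_{i}]", text, flags=re.IGNORECASE)
    return text

def export_episode(episode_id, segments, names_to_redact):
    """Serialize annotated segments as JSON after redacting listed names.
    The input segments are left unmodified."""
    record = {
        "episode_id": episode_id,
        "segments": [
            {**seg, "text": anonymize(seg["text"], names_to_redact)} for seg in segments
        ],
    }
    return json.dumps(record, indent=2, ensure_ascii=False)

segments = [
    {"start": 0.0, "end": 3.0, "speaker": "SPEAKER_1", "text": "Hi, I'm Dana Lee from Austin."},
]
print(export_episode("ep-042", segments, ["Dana Lee"]))
```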

Following these steps produces transcriptions that genuinely improve podcasts. Consider using professional audio annotation services to get the work done accurately.

Before we wrap up, let’s look at the future and the challenges of audio annotation.

The Road Ahead: Future and Challenges of Audio Intelligence and Speech Annotation

The future holds a lot of possibilities for audio annotation. Here’s what you can expect:

A. The Future of Audio Annotation

➤ Real-time audio annotation – Expect livestreams with automatic speaker labels, sound effects, and sentiment indicators appearing in real time.

➤ Cross-modal analysis – Combining audio annotation with video analysis to identify who is speaking and read their facial expressions at the same time.

➤ Generative summaries – AI will soon be able to create a concise and accurate summary of a podcast episode with key quotes and emotional highlights.

B. Current Challenges of Audio Annotation

➤ Crosstalk – One of the major challenges of audio annotation is handling situations where multiple people speak at the same time.

➤ Accents and jargon – Unique terms and different accents can be difficult for voice AI to understand.

➤ Nuance – Sarcasm and complex human emotion remain a problem for AI.
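Handling crosstalk starts with finding where it happens. Assuming diarization output as hypothetical `(start_sec, end_sec, speaker)` tuples, the sketch below sorts segments by start time and reports each span where two different speakers overlap. Such spans could then be routed to human reviewers, in line with the hybrid approach described earlier.

```python
def find_overlaps(segments):
    """Return (start, end, speaker_a, speaker_b) for every span where two
    different speakers' segments overlap in time."""
    ordered = sorted(segments, key=lambda s: s[0])
    overlaps = []
    for i, (s1, e1, spk1) in enumerate(ordered):
        for s2, e2, spk2 in ordered[i + 1:]:
            if s2 >= e1:
                break  # later segments start even later, so none can overlap
            if spk1 != spk2:
                overlaps.append((s2, min(e1, e2), spk1, spk2))
    return overlaps

segments = [(0.0, 5.0, "Host"), (4.2, 9.0, "Guest"), (8.5, 12.0, "Host"), (12.0, 15.0, "Guest")]
print(find_overlaps(segments))
# [(4.2, 5.0, 'Host', 'Guest'), (8.5, 9.0, 'Guest', 'Host')]
```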

More than Words: The New Standard for Media Intelligence

Audio annotation is changing the way podcasts are experienced. Its objective is to transform a transcript into a strategic data asset. The future looks bright for voice technology, and data annotation services continue to improve audio annotation by following best practices like those outlined above.

AI-based audio annotation is not only about improving accuracy and audio quality; it is also about uncovering the hidden value, context, and potential within each audio file. Weigh the benefits of AI audio annotation in transcription services and consider adopting it for more accurate results.

Frequently Asked Questions

What is the process of audio annotation, and why is it important for AI training?

Audio annotation is the process of labeling audio data with metadata, such as speaker identification, acoustic events, sentiment, and transcription. This labeled data helps AI models learn to recognize natural language, voice interactions, and acoustic scenes. As a result, it improves intent classification, speech recognition, and interaction in voice-enabled applications.

How does audio annotation improve the quality of transcriptions for podcasts and media?

Audio annotation is not only about speech-to-text transcription. The process adds speaker diarization, sound event detection, sentiment analysis, and acoustic scene recognition. The layered annotation helps make transcripts more accessible and interactive.

What role does quality assurance play in the process of labeling audio data for long audio files?

Quality assurance involves multiple stages of validation, which ensure that annotations are accurate and follow the annotation guidelines. The result is high-quality audio training data that algorithms depend on to accurately recognize speech and voice notes from multiple sources.

What are the types of acoustic events that are commonly annotated in audio datasets?

Commonly annotated acoustic events include background sounds such as music, applause, and microphone feedback. Annotating them helps AI models detect and interpret context in audio streams, making the models more useful for podcasts, media transcription, and smart home devices.

How do annotation guidelines help maintain consistent audio quality for voice assistants?

Annotation guidelines promote accurate, consistent annotations by defining clear labels, an annotation schema, and timestamping rules. Working with professional annotation services is recommended to ensure accurate data for AI interactions and to help AI models recognize audio across multiple channels. These services can also tailor the guidelines to your requirements.
